
Macroeconomics at 100


Has macroeconomics progressed over the past 100 years, or are we merely treading water? There are good arguments for both sides. Before turning to macroeconomics, I'll start with an analogy from urban planning. Then I’ll argue that macro looks a lot better if we view it as a series of “critiques”, not a series of models.

In the middle of the 20th century, city planners favored replacing messy old urban neighborhoods with modern high rises and expressways. Here’s Le Corbusier’s plan for central Paris:

Today, those models of urban planning seem almost dystopian. What endures are the critiques of the modernist project, such as the work of Jane Jacobs.

I believe that macroeconomics has followed a broadly similar path. We’ve developed lots of highly technical models that have not proved to be very useful, and a bunch of critiques that have proven quite useful.

Many people would choose 1936 as the beginning of modern macroeconomics, as this is when Keynes published his General Theory. I believe 1923 is a more appropriate date. This is partly because Keynes published his best book on macro in 1923 (the Tract on Monetary Reform), but mostly because this was the year that Irving Fisher published his model of the business cycle, which he called a “dance of the dollar.”

[BTW, here’s how Brad DeLong described Keynes’s Tract on Monetary Reform: “This may well be Keynes’s best book. It is certainly the best monetarist economics book ever written.” Bob Hetzel reminded me that 1923 is also the year when the Fed’s annual report first recognized that monetary policy influences the business cycle, and the Fed began trying to mitigate the problem. And it was the year that the German hyperinflation was ended with a currency reform.]

The following graph (from a second version of the paper in 1925) shows Fisher’s estimate of output relative to trend (T) and a distributed lag of monthly inflation rates (P). Many economists regard this as the first important Phillips Curve model.
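A distributed lag of the kind Fisher plotted as P is simply a weighted sum of the current and past monthly inflation rates. The Python sketch below illustrates the idea; the linearly declining weights produced by the hypothetical `declining_weights` helper are an illustrative assumption, not the lag distribution Fisher actually estimated.

```python
def declining_weights(n_lags: int) -> list[float]:
    """Hypothetical linearly declining lag weights that sum to 1.

    weights[k] applies to the inflation rate k months ago (k = 0 is the current month).
    This shape is an assumption for illustration, not Fisher's own weighting.
    """
    raw = [n_lags - k for k in range(n_lags)]
    total = sum(raw)
    return [w / total for w in raw]


def distributed_lag(inflation: list[float], weights: list[float]) -> list[float]:
    """Weighted sum of current and past monthly inflation rates.

    `inflation` is ordered oldest first. One value is returned for each month
    that has a complete lag history, so the output is shorter than the input
    by len(weights) - 1.
    """
    n_lags = len(weights)
    return [
        sum(weights[k] * inflation[t - k] for k in range(n_lags))
        for t in range(n_lags - 1, len(inflation))
    ]


# A one-off burst of inflation keeps influencing the lagged index for several months.
index = distributed_lag([0.0, 0.0, 6.0, 0.0, 0.0], declining_weights(3))
print(index)  # approximately [3.0, 2.0, 1.0]
```

The point of the lag is that a nominal shock does not register in a single month; its influence on the index, and in Fisher's account on output, fades out gradually.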

Fisher argued that causation went from nominal shocks to real output, which is quite different from the “NAIRU” approach to the Phillips Curve more often used by modern macroeconomists—which sees a strong labor market causing inflation.

Today, people tend to underestimate the sophistication of pre-Keynesian macroeconomics, mostly because they used a very different theoretical framework, which makes it hard for modern economists to understand what they were doing. In fact, views on core issues have changed less than many people assume. During the 1920s, most elite macroeconomists assumed that business cycles occurred because nominal shocks impacted employment due to wage and price stickiness. Many prominent economists favored a policy of either price level stabilization (Fisher and Keynes) or nominal income stabilization (Hayek).

The subsequent Keynesian revolution led to a number of important changes in macroeconomics. In my view, four factors played a key role in the Keynesian revolution (which might also be termed the modernist revolution in macro):

1. Very high unemployment in the 1930s made the economy seem inherently unstable—in need of government stabilization policy.

2. Near zero interest rates during the 1930s made monetary policy seem ineffective.

3. Increases in the size of government made fiscal policy seem more powerful.

4. A move from the gold exchange standard to fiat money made the Phillips Curve seem to offer policy options—“tradeoffs”.

While I believe that the implications of these changes were misunderstood, they nonetheless had a profound impact on the direction of macroeconomics. There was a belief that we could construct models of the economy that would allow policymakers to tame the business cycle.

Most people are familiar with the story of how Keynesian macroeconomics overreached in the 1960s, leading to high inflation. This led to a series of important policy critiques. Milton Friedman was the key dissident in the 1960s. He argued:

1. The Phillips Curve does not provide a reliable guide to policy trade-offs.

2. Policy should follow rules, not discretion.

3. Interest rates are not a reliable policy indicator.

4. Fiscal austerity is not an effective solution to inflation.

But Friedman’s positive program for policy (money supply targeting) fared less well, and is now rejected by most macroeconomists.

Bob Lucas built on the work of Friedman, and developed the “Lucas critique” of using econometric models to determine public policy. Unless the models were built up from fundamental microeconomic foundations, the predictions would not be robust when the policy regime shifted. As with Friedman, Lucas was more effective as a critic than as an architect of models with policy implications. It proved quite difficult to create plausible equilibrium models of the business cycle.

New Keynesians had a bit more luck by adding wage and price stickiness to Lucasian rational expectations models, but even those models were unable to produce robust policy implications. Here the problem is not so much that we are unable to come up with plausible models; rather, it is that we have many such models, and we have no way of knowing which model is correct. In practice, the real world probably exhibits many different types of wage and price stickiness, making the macroeconomy too complex for any single model to provide a roadmap for policymakers.

Paul Krugman’s 1998 paper (“It’s Baaack . . .”) provides another example where the critique is the most effective part of the model. Krugman argues that a central bank needs to “promise to be irresponsible” when stuck in a liquidity trap, although it’s hard to know exactly how much inflation would be appropriate. The paper is most effective in showing the limitations of traditional policy recommendations such as printing money (QE) at the zero lower bound. Just as the work of Friedman and Lucas can be viewed as a critique of Keynesianism, Krugman’s 1998 paper is (among other things) a critique of the positive program of traditional monetarism.

That’s not to say there’s been no progress. Back in 1975, Friedman argued that over the past few hundred years all we had really done in macroeconomics is go “one derivative beyond Hume”. Thus Friedman’s famous Natural Rate model went one derivative beyond Fisher’s 1923 model. There’s no question that when compared to the economists of 1923, we now have a more sophisticated understanding of the implications of changes in the trend rate of inflation/NGDP growth. That’s not because we are smarter; rather, it reflects the fact that an extra derivative didn’t seem that important under the gold standard, where long run trend inflation was roughly zero.
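One way to see what “one derivative beyond” means is a stylized comparison. It assumes a linear relationship between the output gap (output y_t minus trend output y*_t) and inflation π_t, where π_t is the change in the log price level p_t, and, for the natural rate case, adaptive expectations in which expected inflation equals last period’s inflation. These functional forms are simplifying assumptions for illustration, not Fisher’s or Friedman’s exact specifications.

```latex
\begin{aligned}
\text{Fisher-style:}\quad & y_t - y_t^* = \alpha\,\pi_t, \qquad \pi_t = p_t - p_{t-1} = \Delta p_t \\
\text{Natural rate:}\quad & y_t - y_t^* = \alpha\,(\pi_t - \pi_t^e) \\
\text{with } \pi_t^e = \pi_{t-1}:\quad & y_t - y_t^* = \alpha\,(\pi_t - \pi_{t-1}) = \alpha\,\Delta\pi_t = \alpha\,\Delta^2 p_t
\end{aligned}
```

In the first line output responds to the first difference of the price level; in the last it responds to the second difference. If expectations are instead anchored near zero (π^e_t ≈ 0), as one might expect under a gold standard with roughly zero trend inflation, the natural rate version collapses back to the Fisher-style relation, which is why the extra derivative mattered little before fiat money.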

When I started out doing research, I bought into the claims that we were making “progress” in developing models of the macroeconomy. Over time, we might expect better and better models, capable of providing useful advice to policymakers. After the fiasco of 2008, I realized that the emperor had no clothes. Economists as a whole were not equipped with a consensus model capable of providing useful policy advice. Economists were all over the map in their policy recommendations. If we actually had been making progress, we would not have revived the tired old debates of the 1930s. Even if the quality of academic publications is higher than ever in a technical sense, the content seems less interesting than in the past. Maybe we expected too much.

More recently, high inflation has led to a revival of 1970s-era inflation models that I had assumed were long dead. You see discussion of “wage-price spirals”, of “greedflation”, or of the need for tax increases to rein in inflation. And just as in the 1920s, you have some economists advocating price level targets while others endorse NGDP targeting.

Going forward, I’d expect to see a greater role for market indicators such as financial derivatives linked to important macroeconomic variables. In other words, like most macroeconomists I see future developments as validating my current views.

So how should we think about the progress in macro over the past century? Here are a few observations:

1. Both in the 1920s and today, economists have concluded that certain types of shocks have a big impact on the business cycle. The name given to these shocks varies over time, including “demand shocks”, “nominal shocks” and “monetary shocks”, but all describe a broadly similar concept. Then and now, economists believe that sticky wages and prices help to explain why these shocks have real effects. In addition, economists have always recognized that supply shocks such as wars and droughts can impact aggregate output. So there is certainly some important continuity in macroeconomics.

2. Economists have made enormous progress in developing highly technical general equilibrium models of the business cycle. But it’s not clear what we are to do with these models. Forecasting? Economists continue to be unable to forecast the business cycle. Indeed it’s not clear that there has been any progress in our business cycle forecasting ability since the 1920s. Policy implications? Today, macroeconomists tend to favor policies such as inflation/price level targeting, or NGDP targeting. Back in the 1920s, the most distinguished macroeconomists had similar views. What’s changed is that this view is now much more widely held. Back in the 1920s, many real world policymakers were skeptical of price level or NGDP targets, instead relying on the supposedly automatic stabilizing properties of the gold standard, which had been degraded by WWI.

3. The shift from a gold exchange standard to a pure fiat money regime allows a much wider range of monetary policy choices, such as different trend rates of inflation. Fiscal policy has become more important. These changes made policymakers much more ambitious, perhaps too ambitious. In my view, the greatest progress in macro is a series of “critiques” of policy recommendations during the second half of the 20th century. Friedman and Lucas provided an important critique of mid-20th century Keynesian ideas, and Krugman’s 1998 paper pointed to problems with monetarist assumptions about the effectiveness of QE at the zero lower bound.

A series of critiques sounds less impressive than a successful positive program to tame the business cycle. But I believe it is a mistake to discount the importance of these ideas. They have helped to steer policy in a better direction, even as many problems remain unsolved.


Comments on February Employment Report


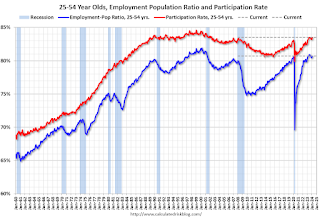

Prime (25 to 54 Years Old) Participation

Since the overall participation rate is impacted by both cyclical (recession) and demographic (aging population, younger people staying in school) reasons, here is the employment-population ratio for the key working age group: 25 to 54 years old.

The 25 to 54 years old participation rate increased in February to 83.5% from 83.3% in January, and the 25 to 54 employment population ratio increased to 80.7% from 80.6% the previous month.
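The two prime-age measures differ only in the numerator: the participation rate counts everyone in the labor force (employed plus unemployed), while the employment-population ratio counts only the employed. A minimal Python sketch of these standard definitions follows; the counts are hypothetical round numbers chosen to reproduce the February rates, not BLS data.

```python
def participation_rate(employed: float, unemployed: float, population: float) -> float:
    """Labor force (employed + unemployed) as a percent of the civilian noninstitutional population."""
    return 100 * (employed + unemployed) / population


def employment_population_ratio(employed: float, population: float) -> float:
    """Employed persons as a percent of the civilian noninstitutional population."""
    return 100 * employed / population


# Hypothetical prime-age counts (thousands), not actual BLS figures.
population, employed, unemployed = 1000.0, 807.0, 28.0
print(participation_rate(employed, unemployed, population))  # 83.5
print(employment_population_ratio(employed, population))     # 80.7
```

The 2.8-point gap between the two is just the unemployed as a share of the population, which is why the series usually move together.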

Average Hourly Wages


The graph shows the nominal year-over-year change in "Average Hourly Earnings" for all private employees from the Current Employment Statistics (CES). Wage growth has trended down after peaking at 5.9% YoY in March 2022 and was at 4.3% YoY in February.
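The year-over-year figure compares each month with the same month a year earlier, which largely sidesteps seasonal patterns. A minimal sketch, using hypothetical dollar values rather than actual CES data:

```python
from typing import Optional


def yoy_change(current: float, year_ago: float) -> float:
    """Percent change from the same month one year earlier."""
    return 100 * (current / year_ago - 1)


def yoy_series(monthly: list[float], lag: int = 12) -> list[Optional[float]]:
    """YoY percent changes for a monthly series; the first `lag` months have no comparison month."""
    return [
        None if i < lag else yoy_change(monthly[i], monthly[i - lag])
        for i in range(len(monthly))
    ]


# Hypothetical average hourly earnings: $30.00 a year ago, $31.29 now.
print(round(yoy_change(31.29, 30.00), 1))  # 4.3
```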

Part Time for Economic Reasons

From the BLS report:

“The number of people employed part time for economic reasons, at 4.4 million, changed little in February. These individuals, who would have preferred full-time employment, were working part time because their hours had been reduced or they were unable to find full-time jobs.”

The number of persons working part time for economic reasons decreased in February to 4.36 million from 4.42 million in January. This is slightly above pre-pandemic levels.

These workers are included in the alternate measure of labor underutilization (U-6) that increased to 7.3% from 7.2% in the previous month. This is down from the record high in April 2020 of 23.0% and up from the lowest level on record (seasonally adjusted) in December 2022 (6.5%). (This series started in 1994). This measure is above the 7.0% level in February 2020 (pre-pandemic).
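U-6 is broader than the official unemployment rate because it adds two groups to the numerator: people marginally attached to the labor force and people working part time for economic reasons. A minimal sketch of the BLS definition, with hypothetical counts:

```python
def u6_rate(unemployed: float, marginally_attached: float,
            part_time_economic: float, labor_force: float) -> float:
    """U-6 as defined by the BLS.

    Numerator: total unemployed + all marginally attached workers
               + employed part time for economic reasons.
    Denominator: civilian labor force + all marginally attached workers.
    """
    numerator = unemployed + marginally_attached + part_time_economic
    denominator = labor_force + marginally_attached
    return 100 * numerator / denominator


# Hypothetical counts (millions), not actual BLS figures.
print(u6_rate(5.0, 1.0, 4.0, 99.0))  # 10.0
```

Because workers who are part time for economic reasons enter U-6 but not the official rate, a rise in that group can push U-6 up even when headline unemployment is steady.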

Unemployed over 26 Weeks


This graph shows the number of workers unemployed for 27 weeks or more. According to the BLS, there are 1.203 million workers who have been unemployed for more than 26 weeks and still want a job, down from 1.277 million the previous month.

This is close to pre-pandemic levels.

Job Streak

Headline Jobs, Top 10 Streaks

| Rank | Year Ended | Streak (Months) |
|---|---|---|
| 1 | 2019 | 100 |
| 2 | 1990 | 48 |
| 3 | 2007 | 46 |
| 4 | 1979 | 45 |
| 5 | 2024¹ | 38 |
| 6 (tie) | 1943 | 33 |
| 6 (tie) | 1986 | 33 |
| 6 (tie) | 2000 | 33 |
| 9 | 1967 | 29 |
| 10 | 1995 | 25 |

¹ Current streak
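A ranking like this can be built by scanning monthly payroll changes for runs of consecutive gains. The sketch below assumes that any month with a change of zero or less ends a streak and labels each streak by its final month; the data are hypothetical.

```python
def positive_streaks(changes: list[float], labels: list[str]) -> list[tuple[int, str]]:
    """Return (length, label of last month) for every run of consecutive positive changes,
    longest first. A run still unbroken at the end of the data is the current streak."""
    streaks = []
    run = 0
    for i, change in enumerate(changes):
        if change > 0:
            run += 1
        else:
            if run:
                streaks.append((run, labels[i - 1]))
            run = 0
    if run:
        streaks.append((run, labels[-1]))
    # sorted() is stable even with reverse=True, so ties keep chronological order
    return sorted(streaks, key=lambda s: s[0], reverse=True)


# Hypothetical monthly payroll changes (thousands).
months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug"]
changes = [150, 200, -20, 120, 90, 60, 0, 175]
print(positive_streaks(changes, months))  # [(3, 'Jun'), (2, 'Feb'), (1, 'Aug')]
```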

Summary:

The headline monthly jobs number was above consensus expectations; however, December and January payrolls were revised down by 167,000 combined. The participation rate was unchanged, the employment population ratio decreased, and the unemployment rate increased to 3.9%. Another solid report.


Immune cells can adapt to invading pathogens, deciding whether to fight now or prepare for the next battle

When faced with a threat, T cells have the decision-making flexibility to both clear out the pathogen now and ready themselves for a future encounter.

How does your immune system decide between fighting invading pathogens now or preparing to fight them in the future? Turns out, it can change its mind.

Every person has 10 million to 100 million unique T cells that have a critical job in the immune system: patrolling the body for invading pathogens or cancerous cells to eliminate. Each of these T cells has a unique receptor that allows it to recognize foreign proteins on the surface of infected or cancerous cells. When the right T cell encounters the right protein, it rapidly forms many copies of itself to destroy the offending pathogen.

Importantly, this process of proliferation gives rise to both short-lived effector T cells that shut down the immediate pathogen attack and long-lived memory T cells that provide protection against future attacks. But how do T cells decide whether to form cells that kill pathogens now or protect against future infections?

We are a team of bioengineers studying how immune cells mature. In our recently published research, we found that having multiple pathways to decide whether to kill pathogens now or prepare for future invaders boosts the immune system’s ability to effectively respond to different types of challenges.

Fight or remember?

To understand when and how T cells decide to become effector cells that kill pathogens or memory cells that prepare for future infections, we took movies of T cells dividing in response to a stimulus mimicking an encounter with a pathogen.

Specifically, we tracked the activity of a gene called T cell factor 1, or TCF1. This gene is essential for the longevity of memory cells. We found that stochastic, or probabilistic, silencing of the TCF1 gene when cells confront invading pathogens and inflammation drives an early decision between whether T cells become effector or memory cells. Exposure to higher levels of pathogens or inflammation increases the probability of forming effector cells.

Surprisingly, though, we found that some effector cells that had turned off TCF1 early on were able to turn it back on after clearing the pathogen, later becoming memory cells.

Through mathematical modeling, we determined that this flexibility in decision making among memory T cells is critical to generating the right number of cells that respond immediately and cells that prepare for the future, appropriate to the severity of the infection.
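The two routes to memory described above can be illustrated with a deliberately simple toy model. This is a hypothetical sketch, not the mathematical model from the study: each activated cell silences TCF1 with probability `p_silence` (which, per the findings above, rises with pathogen load and inflammation), and each resulting effector cell later turns TCF1 back on with probability `p_reactivate`.

```python
import random


def simulate_fates(n_cells: int, p_silence: float, p_reactivate: float,
                   seed: int = 0) -> dict[str, int]:
    """Toy (hypothetical) fate model with an early and a late route to memory."""
    rng = random.Random(seed)
    fates = {"early_memory": 0, "late_memory": 0, "effector": 0}
    for _ in range(n_cells):
        if rng.random() < p_silence:
            # TCF1 silenced early: the cell starts out as an effector.
            if rng.random() < p_reactivate:
                fates["late_memory"] += 1   # turns TCF1 back on after clearance
            else:
                fates["effector"] += 1
        else:
            fates["early_memory"] += 1      # TCF1 kept on: early memory fate
    return fates


def expected_memory_fraction(p_silence: float, p_reactivate: float) -> float:
    """Average share of cells that end up as memory cells via either route."""
    return (1 - p_silence) + p_silence * p_reactivate


# A stronger stimulus (higher p_silence) yields more effectors up front,
# but reactivation still returns some of them to the memory pool.
print(round(expected_memory_fraction(0.5, 0.2), 2))  # 0.6
print(round(expected_memory_fraction(0.9, 0.2), 2))  # 0.28
```

In this toy setup, without the late route (`p_reactivate = 0`) a strong stimulus would leave very few memory cells; the second pathway is what keeps the memory pool from collapsing.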

Understanding immune memory

The proper formation of persistent, long-lived T cell memory is critical to a person’s ability to fend off diseases ranging from the common cold to COVID-19 to cancer.

From a social and cognitive science perspective, flexibility allows people to adapt and respond optimally to uncertain and dynamic environments. Similarly, for immune cells responding to a pathogen, flexibility in decision making around whether to become memory cells may enable greater responsiveness to an evolving immune challenge.

Memory cells can be subclassified into different types with distinct features and roles in protective immunity. It’s possible that the pathway where memory cells diverge from effector cells early on and the pathway where memory cells form from effector cells later on give rise to particular subtypes of memory cells.

Our study focuses on T cell memory in the context of acute infections the immune system can successfully clear in days, such as the common cold, the flu or food poisoning. In contrast, chronic conditions such as HIV and cancer require persistent immune responses; long-lived, memory-like cells are critical for this persistence. Our team is investigating whether flexible memory decision making also applies to chronic conditions and whether we can leverage that flexibility to improve cancer immunotherapy.

Resolving uncertainty surrounding how and when memory cells form could help improve vaccine design and therapies that boost the immune system’s ability to provide long-term protection against diverse infectious diseases.

Kathleen Abadie was funded by a National Science Foundation (NSF) Graduate Research Fellowship. She performed this research in affiliation with the University of Washington Department of Bioengineering.

Elisa Clark performed her research in affiliation with the University of Washington (UW) Department of Bioengineering and was funded by a National Science Foundation Graduate Research Fellowship (NSF-GRFP) and by a predoctoral fellowship through the UW Institute for Stem Cell and Regenerative Medicine (ISCRM).

Hao Yuan Kueh receives funding from the National Institutes of Health.


Stock indexes are breaking records and crossing milestones – making many investors feel wealthier


The S&P 500 stock index topped 5,000 for the first time on Feb. 9, 2024, exciting some investors and garnering a flurry of media coverage. The Conversation asked Alexander Kurov, a financial markets scholar, to explain what stock indexes are and to say whether this kind of milestone is a big deal or not.

What are stock indexes?

Stock indexes measure the performance of a group of stocks. When prices rise or fall overall for the shares of those companies, so do stock indexes. The number of stocks in those baskets varies, as does the system for how this mix of shares gets updated.

The Dow Jones Industrial Average, also known as the Dow, includes shares in 30 large U.S. companies, chosen by a committee rather than strictly ranked by market capitalization – meaning the total value of all the stock belonging to shareholders. That list currently spans companies from Apple to Walt Disney Co.

The S&P 500 tracks shares in 500 of the largest U.S. publicly traded companies.

The Nasdaq composite tracks performance of more than 2,500 stocks listed on the Nasdaq stock exchange.

The DJIA, launched on May 26, 1896, is the oldest of these three popular indexes, and it was one of the first established.

Two enterprising journalists, Charles H. Dow and Edward Jones, had created a different index tied to the railroad industry a dozen years earlier. Most of the 12 stocks the DJIA originally included wouldn’t ring many bells today, such as Chicago Gas and National Lead. But one original member stayed in the index for more than a century before being booted in 2018: General Electric.

The S&P 500 index was introduced in 1957 because many investors wanted an option that was more representative of the overall U.S. stock market. The Nasdaq composite was launched in 1971.

You can buy shares in an index fund that mirrors a particular index. This approach can diversify your investments and make them less prone to big losses.

Index funds, which have only existed since Vanguard Group founder John Bogle launched the first one in 1976, now hold trillions of dollars.

Why are there so many?

There are hundreds of stock indexes in the world, but only about 50 major ones.

Most of them, including the Nasdaq composite and the S&P 500, are value-weighted. That means stocks with larger market values account for a larger share of the index’s performance.
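To see how the weighting scheme changes what an index reports, here is a minimal sketch comparing a value-weighted index with a price-weighted one (the Dow’s approach). The two stocks and their numbers are hypothetical, and the price-weighted version ignores the Dow divisor, which cancels out of a period return as long as it does not change during the period.

```python
from dataclasses import dataclass


@dataclass
class Stock:
    name: str
    shares: float        # shares outstanding
    price_start: float
    price_end: float


def value_weighted_return(stocks: list[Stock]) -> float:
    """Index return when each stock counts in proportion to its market value."""
    start = sum(s.shares * s.price_start for s in stocks)
    end = sum(s.shares * s.price_end for s in stocks)
    return end / start - 1


def price_weighted_return(stocks: list[Stock]) -> float:
    """Index return when each stock counts in proportion to its share price."""
    start = sum(s.price_start for s in stocks)
    end = sum(s.price_end for s in stocks)
    return end / start - 1


stocks = [
    Stock("BigCo", shares=1_000, price_start=100.0, price_end=120.0),   # +20%
    Stock("SmallCo", shares=100, price_start=150.0, price_end=135.0),   # -10%
]
print(f"value-weighted: {value_weighted_return(stocks):.1%}")  # value-weighted: 16.1%
print(f"price-weighted: {price_weighted_return(stocks):.1%}")  # price-weighted: 2.0%
```

The big company’s 20% gain dominates the value-weighted result, while the price-weighted index is dragged down by the smaller company simply because its shares cost more.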

In addition to these broad-based indexes, there are many less prominent ones. Many of those emphasize a niche by tracking stocks of companies in specific industries like energy or finance.

Do these milestones matter?

Stock prices move constantly in response to corporate, economic and political news, as well as changes in investor psychology. Because company profits typically grow gradually over time, the market usually increases in value over the long term, even though it fluctuates in the short term.

The DJIA first reached 1,000 in November 1972, and it crossed the 10,000 mark on March 29, 1999. On Jan. 22, 2024, it surpassed 38,000 for the first time. Investors and the media will treat the new record set when it gets to another round number – 40,000 – as a milestone.

The S&P 500 index had never hit 5,000 before. But it had already been breaking records for several weeks.

Because there’s a lot of randomness in financial markets, the significance of round-number milestones is mostly psychological. There is no evidence they portend any further gains.

For example, the Nasdaq composite first hit 5,000 on March 10, 2000, at the end of the dot-com bubble.

The index then plunged by almost 80% by October 2002. It took 15 years – until March 3, 2015 – for it to return to 5,000.
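The long wait reflects simple arithmetic: after a fall of a fraction d, prices must rise by d / (1 - d) just to get back to where they started. A minimal sketch:

```python
def gain_needed_to_recover(drop: float) -> float:
    """Fractional gain needed to return to the prior level after a fractional drop."""
    if not 0 <= drop < 1:
        raise ValueError("drop must be in [0, 1)")
    return drop / (1 - drop)


for drop in (0.34, 0.5, 0.8):
    print(f"{drop:.0%} drop -> {gain_needed_to_recover(drop):.1%} gain needed")
```

A drop of nearly 80% requires roughly a 400% gain to recover, while the S&P 500’s 34% plunge in early 2020 required a gain of about 51.5%.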

By mid-February 2024, the Nasdaq composite was nearing its prior record high of 16,057 set on Nov. 19, 2021.

Index milestones matter to the extent they pique investors’ attention and boost market sentiment.

Investors afflicted with a fear of missing out may then invest more in stocks, pushing stock prices to new highs. Chasing after stock trends may destabilize markets by moving prices away from their underlying values.

When a stock index passes a new milestone, investors become more aware of their growing portfolios. Feeling richer can lead them to spend more.

This is called the wealth effect. Many economists believe that the consumption boost that arises in response to a buoyant stock market can make the economy stronger.

Is there a best stock index to follow?

Not really. They all measure somewhat different things and have their own quirks.

For example, the S&P 500 tracks many different industries. However, because it is value-weighted, it’s heavily influenced by only seven stocks with very large market values.

Known as the “Magnificent Seven,” shares in Amazon, Apple, Alphabet, Meta, Microsoft, Nvidia and Tesla now account for over one-fourth of the S&P 500’s value. Nearly all are in the tech sector, and they played a big role in pushing the S&P across the 5,000 mark.

This makes the index more concentrated on a single sector than it appears.
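Concentration of this kind is easy to measure in a value-weighted index: add up the market values of the largest few companies and divide by the total. A minimal sketch with hypothetical market values:

```python
def top_k_share(market_values: list[float], k: int) -> float:
    """Combined weight of the k largest stocks in a value-weighted index."""
    total = sum(market_values)
    largest = sorted(market_values, reverse=True)[:k]
    return sum(largest) / total


# Hypothetical market values (billions of dollars) for a ten-stock index.
caps = [400.0, 200.0] + [50.0] * 8
print(f"{top_k_share(caps, 2):.0%}")  # 60%
```

Here two of ten stocks make up 60% of the index, so their moves largely determine its direction, the same effect that lets seven stocks drive much of the S&P 500.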

But if you check out several stock indexes rather than just one, you’ll get a good sense of how the market is doing. If they’re all rising quickly or breaking records, that’s a clear sign that the market as a whole is gaining.

Sometimes the smartest thing is to not pay too much attention to any of them.

For example, after hitting record highs on Feb. 19, 2020, the S&P 500 plunged by 34% in just 23 trading days due to concerns about what COVID-19 would do to the economy. But the market rebounded, with stock indexes hitting new milestones and notching new highs by the end of that year.

Panicking in response to short-term market swings would have made investors more likely to sell off their investments in too big a hurry – a move they might have later regretted. This is why I believe advice from the immensely successful investor and fan of stock index funds Warren Buffett is worth heeding.

Buffett, whose stock-selecting prowess has made him one of the world’s 10 richest people, likes to say “Don’t watch the market closely.”

If you’re reading this because stock prices are falling and you’re wondering if you should be worried about that, consider something else Buffett has said: “The light can at any time go from green to red without pausing at yellow.”

And the opposite is true as well.

Alexander Kurov does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.
