
What Is The Byzantine Generals Problem?

Byzantine Generals Problem: Proof of Work, The Solution

Introduction

The Byzantine Generals Problem is a game theory problem that illustrates how hard it is for a group of mutually suspicious parties to reach consensus when they must communicate over unreliable channels. Game theory studies the strategies that independent, competing actors adopt when making decisions.

This article explores the Byzantine Generals Problem, its origins, how it applies to networked systems and money, and how Bitcoin combines its different components to reach the consensus needed to resolve it.

The Byzantine Generals Problem arises particularly in distributed computing, where decentralized parties must reach consensus without relying on a trusted central party.

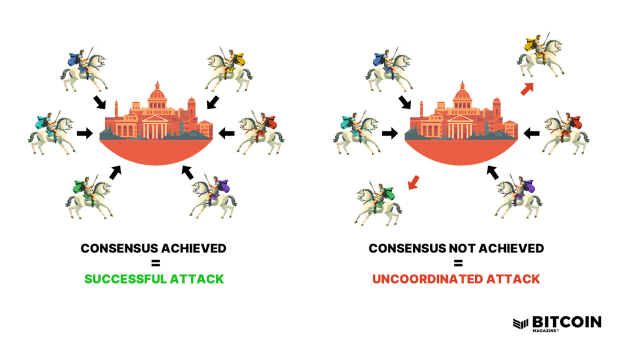

The game theory analogy is framed around a group of generals besieging Byzantium, each commanding a division of the army. The generals must agree on a common plan: attack the city or retreat. If they coordinate and all attack simultaneously, they win. If they fail to coordinate, they lose.

How can the generals coordinate to attack simultaneously if they have to rely on messengers who can be intercepted or corrupted by Byzantium’s defenders?

They must design a protocol that lets the loyal generals reach a robust consensus even when some of the generals are traitors working against them.
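
The 1982 paper discussed below proves that, when messages are unsigned ("oral"), agreement is possible only if traitors make up fewer than one-third of the generals. It gives an algorithm, OM(m), that works under that bound. Below is a minimal Python sketch of OM(1) with one traitor. Several choices here are illustrative assumptions rather than the paper's exact formulation: the specific traitor strategy, the tie-break toward "retreat", and the function names.

```python
from collections import Counter
from typing import Dict, List, Set

ATTACK, RETREAT = "attack", "retreat"


def majority(votes: List[str]) -> str:
    """Majority vote over orders; a tie falls back to RETREAT (an assumption)."""
    counts = Counter(votes)
    return ATTACK if counts[ATTACK] > counts[RETREAT] else RETREAT


def flip(order: str) -> str:
    return RETREAT if order == ATTACK else ATTACK


def om1(order: str, lieutenants: List[str], traitors: Set[str]) -> Dict[str, str]:
    """Simulate OM(1): one commander, several lieutenants, at most one traitor.

    Hypothetical traitor strategy: a traitorous commander sends ATTACK to
    even-indexed lieutenants and RETREAT to odd-indexed ones; a traitorous
    lieutenant relays the opposite of what it received.
    Returns the decision of every loyal lieutenant.
    """
    # Round 1: the commander sends an order to every lieutenant.
    received = {}
    for i, lt in enumerate(lieutenants):
        if "commander" in traitors:
            received[lt] = ATTACK if i % 2 == 0 else RETREAT
        else:
            received[lt] = order

    # Round 2: every lieutenant relays what it received to the others,
    # and each loyal lieutenant decides by majority over all values it holds.
    decisions = {}
    for lt in lieutenants:
        if lt in traitors:
            continue
        votes = [received[lt]]
        for other in lieutenants:
            if other != lt:
                relayed = received[other]
                votes.append(flip(relayed) if other in traitors else relayed)
        decisions[lt] = majority(votes)
    return decisions


# Four generals, one traitorous lieutenant: loyal lieutenants follow the loyal commander.
print(om1(ATTACK, ["L1", "L2", "L3"], {"L3"}))
# Four generals, traitorous commander: the loyal lieutenants still agree with each other.
print(om1(ATTACK, ["L1", "L2", "L3"], {"commander"}))
# Three generals, one traitorous lieutenant: the loyal lieutenant is misled.
print(om1(ATTACK, ["L1", "L2"], {"L2"}))
```

With four generals, both traitor scenarios end with the loyal lieutenants in agreement. With only three, the lone loyal lieutenant receives one "attack" and one "retreat", cannot tell which general lied, and falls back to retreating against a loyal commander's order. The paper shows that no oral-message algorithm can avoid this with three generals and one traitor.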

Centralized And Decentralized Systems

The Byzantine Generals Problem doesn’t occur in centralized systems, because a central authority makes all of the organization's decisions.

In a centralized system, therefore, the challenge lies in ensuring the reliability and integrity of communication between the authority and its subordinate entities, rather than in achieving consensus among multiple independent parties as in distributed systems. The messages or commands the authority sends must not be tampered with or maliciously altered in transit.

The Byzantine Generals Problem arises only in decentralized systems, where reaching agreement is harder. Such a network must be designed with secure communication channels, so that intercepted or interrupted messages cannot cause a coordinated action to fail.

Historical Background

The Byzantine Empire's Influence

While the term "Byzantine Generals Problem" isn’t directly connected to the historical Byzantine Empire, some parallels and potential influences may have led to the concept's origin.

Byzantine fault tolerance, a term used in distributed computing, is the ability of a system to keep working correctly despite faulty or malicious components. In this context, the term "Byzantine" is inspired by the Byzantine Empire's historical challenges of coordinating actions and communication among its generals, some of whom could be traitorous or unreliable.

Furthermore, the Byzantine Empire had a highly hierarchical structure with decentralized decision-making, where various generals and commanders were responsible for leading their respective armies. Similarly, nodes or entities in distributed systems may have independent decision-making capabilities, and achieving consensus among them poses challenges comparable to coordinating actions among multiple Byzantine generals.

The parallel with the Byzantine Empire in the Byzantine Generals Problem provides a symbolic framework for understanding the difficulties of achieving consensus and fault tolerance in distributed systems. The complex decision-making dynamics and potential for malicious behavior seen in historical Byzantine military campaigns mirror the challenges that distributed computing faces.

Origin Of The Byzantine Generals Problem

The term Byzantine Generals Problem was first introduced by computer scientists Leslie Lamport, Robert Shostak, and Marshall Pease in a paper published in 1982.

The National Aeronautics and Space Administration, the Ballistic Missile Defense Systems Command, and the Army Research Office partly funded the research. This funding underscores the problem's importance and shows that the concept applies to military communication as well as to computer systems of all kinds.

In modern computing, the Byzantine Generals Problem must be solved if a dispersed group of nodes (e.g., computers or other physical devices) needs to achieve reliable communications.

Analogy To Modern Computing

The Byzantine Generals Problem mainly affects distributed computing, because achieving consensus in a network where nodes can be faulty or malicious is challenging. It has applications in various areas, including fault-tolerant systems, distributed databases, and blockchain technology. The problem has driven the development of Byzantine fault-tolerant consensus protocols and algorithms, which are crucial for ensuring the reliability and consistency of distributed systems.

In blockchain systems, the Byzantine Generals Problem is addressed by consensus protocols such as proof of work (PoW), which let multiple nodes reach agreement in a trustless environment. Byzantine fault tolerance is an essential characteristic of decentralized blockchain networks.

In cybersecurity and intrusion detection, the Byzantine Generals Problem analogy helps explain the challenge of securing computer networks against malicious actors who may try to disrupt communication, tamper with data, or launch attacks. It also helps identify and mitigate such threats.

The Byzantine Generals Problem finds application in internet of things (IoT) networks, where numerous devices must communicate and cooperate to perform tasks. Ensuring consensus and coordination among IoT devices, especially in the presence of unreliable or compromised nodes, is crucial for maintaining the integrity and security of IoT systems.

The Byzantine Generals Problem is also essential in cloud computing to ensure reliability and fault tolerance in distributed cloud environments. Byzantine fault-tolerant protocols can handle faults and malicious behaviors within cloud computing systems.

Popular Byzantine Fault-Tolerance Algorithms

To ensure that a small group of malicious actors cannot disrupt a distributed system, an algorithm is required. Several Byzantine fault-tolerant (BFT) consensus protocols have been developed to keep distributed computing reliable in the presence of Byzantine failures.

Practical Byzantine Fault Tolerance (PBFT):

Practical Byzantine Fault Tolerance (PBFT) is a consensus algorithm for distributed systems that tolerates up to f Byzantine nodes out of n = 3f + 1 in total. In other words, fewer than one-third of the nodes may behave arbitrarily or maliciously without compromising the network.

The algorithm ensures that the system agrees on the order of requests and stays consistent even under Byzantine failures. PBFT combines three mechanisms: digital signatures or message authentication codes, timeouts that trigger a change of leader, and rounds of acknowledgments in which each step needs matching messages from 2f + 1 nodes. Together these keep the consensus process moving even if some nodes are faulty or malicious, as long as more than two-thirds of the nodes are honest.
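
The "fewer than one-third" bound comes down to a little arithmetic on group sizes. The sketch below assumes the standard PBFT setting of exactly n = 3f + 1 replicas. The function name `pbft_sizes` is hypothetical. The sketch checks two properties for small f. First, a quorum of 2f + 1 can still form while all f faulty replicas stay silent. Second, any two quorums share at least f + 1 replicas, so at least one shared replica is honest.

```python
from typing import Tuple


def pbft_sizes(f: int) -> Tuple[int, int, int]:
    """Replica count, quorum size and worst-case quorum overlap for f faults."""
    n = 3 * f + 1             # smallest group that tolerates f Byzantine replicas
    quorum = 2 * f + 1        # matching messages needed in the prepare/commit phases
    overlap = 2 * quorum - n  # any two quorums share at least this many replicas
    return n, quorum, overlap


for f in range(1, 5):
    n, quorum, overlap = pbft_sizes(f)
    # Liveness: a quorum can still form when all f faulty replicas stay silent.
    assert quorum <= n - f
    # Safety: two quorums overlap in at least f + 1 replicas, so at least one
    # honest replica is in both and will not vouch for two conflicting orders.
    assert overlap >= f + 1
    print(f"f={f}: n={n}, quorum={quorum}, overlap={overlap}")
```

The same arithmetic shows why three replicas per fault are not enough. With n = 3f, a quorum small enough to form without the f silent replicas has at most 2f members, and two such quorums may overlap in only f replicas. All of those replicas could be faulty.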

Federated Byzantine Agreement (FBA):

Federated Byzantine Agreement (FBA) is another consensus algorithm in distributed systems designed for a decentralized network of nodes that can reach consensus without relying on a centralized authority.

FBA does not require a fixed, globally agreed membership. Instead, each node chooses its own quorum slices: sets of nodes it trusts enough to accept a statement once they all agree. A quorum is a set of nodes that contains at least one slice of each of its members. Because slices overlap across the network, nodes with different trust choices can still converge on the ordering and validity of transactions. The Stellar Consensus Protocol is the best-known FBA. Fedimint, one of the best-known open-source federated protocols for transacting and custodying bitcoin, uses the Honey Badger Byzantine fault-tolerant (HBBFT) consensus algorithm.
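
In the Stellar Consensus Protocol's formulation of FBA, each node declares its quorum slices, and a quorum is a non-empty set of nodes that contains at least one slice of each of its members. The following minimal Python sketch checks that definition. The four-node configuration is hypothetical, and, following the usual convention, each slice includes the node that declares it.

```python
from typing import Dict, FrozenSet, List, Set

# Each node lists its quorum slices; by convention a slice includes the node itself.
Slices = Dict[str, List[FrozenSet[str]]]


def is_quorum(candidate: Set[str], slices: Slices) -> bool:
    """True if candidate is non-empty and contains a slice of every member."""
    if not candidate:
        return False
    return all(any(s <= candidate for s in slices[node]) for node in candidate)


# Hypothetical four-node configuration.
slices: Slices = {
    "A": [frozenset({"A", "B", "C"})],
    "B": [frozenset({"A", "B", "C"})],
    "C": [frozenset({"A", "B", "C"}), frozenset({"C", "D"})],
    "D": [frozenset({"C", "D"})],
}

print(is_quorum({"A", "B", "C"}, slices))  # True: each member's slice is inside
print(is_quorum({"C", "D"}, slices))       # True: C and D both trust {C, D}
print(is_quorum({"A", "B"}, slices))       # False: A's only slice also needs C
```

In this configuration, the quorums {A, B, C} and {C, D} overlap only in C. FBA's safety depends on such overlaps containing well-behaved nodes, so a faulty C could let the two quorums accept conflicting statements.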

Bitcoin's Solution: Proof-Of-Work:

While Bitcoin’s proof-of-work (PoW) consensus mechanism is not technically a Byzantine fault-tolerant algorithm, it is nonetheless used to make Bitcoin Byzantine fault tolerant. Network nodes will not accept a block as valid unless its header hashes to a value below the network's current difficulty target, which proves that computational work was expended to produce it.
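
The asymmetry behind proof of work is easy to see in a toy miner. Finding a qualifying hash takes many attempts, but checking one takes a single hash. This Python sketch mirrors two Bitcoin details: double SHA-256, and reading the hash as a little-endian integer. It deliberately simplifies the rest. The "header" is an arbitrary byte string with a 4-byte nonce appended, not Bitcoin's 80-byte header layout, and the difficulty is set very low so that it runs in well under a second.

```python
import hashlib
from typing import Optional, Tuple


def double_sha256(data: bytes) -> bytes:
    """Bitcoin hashes block headers with SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def meets_target(header: bytes, target: int) -> bool:
    # Bitcoin reads the 32-byte hash as a little-endian integer.
    return int.from_bytes(double_sha256(header), "little") < target


def mine(prefix: bytes, target: int, max_nonce: int = 2**32) -> Optional[Tuple[int, bytes]]:
    """Try nonces until prefix + nonce hashes below target (toy header layout)."""
    for nonce in range(max_nonce):
        header = prefix + nonce.to_bytes(4, "little")
        if meets_target(header, target):
            return nonce, header
    return None


# Toy difficulty: about 1 in 2**16 hashes qualifies, so expect ~65,000 attempts.
target = 1 << (256 - 16)
result = mine(b"toy block header", target)
if result is not None:
    nonce, header = result
    print("found nonce", nonce)
    # Verification is a single hash, no matter how long mining took.
    print("valid:", meets_target(header, target))
```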

Byzantine fault tolerance requires a network to reach consensus while tolerating a certain number of faulty or malicious nodes. Bitcoin's PoW consensus mechanism offers probabilistic finality: the more blocks are built on top of a given block, the harder it becomes for an adversary to rewrite or alter that part of the history.
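
Section 11 of the Bitcoin white paper quantifies this. It estimates the probability that an attacker controlling a share q of the hash power ever catches up from z blocks behind. Below is a Python port of the paper's calculation. The function name is our own, and the early return for q ≥ 0.5 reflects the paper's observation that a majority attacker eventually succeeds.

```python
import math


def attacker_success_probability(q: float, z: int) -> float:
    """Chance an attacker with hash-power share q ever overtakes the honest
    chain from z blocks behind (Nakamoto 2008, section 11)."""
    p = 1.0 - q
    if q >= p:
        return 1.0  # a majority attacker eventually catches up
    lam = z * (q / p)  # expected attacker progress while honest nodes mine z blocks
    total = 1.0
    for k in range(z + 1):
        # Poisson probability that the attacker has mined exactly k blocks.
        poisson = math.exp(-lam)
        for i in range(1, k + 1):
            poisson *= lam / i
        # Subtract the cases in which the attacker never closes the remaining gap.
        total -= poisson * (1.0 - (q / p) ** (z - k))
    return total


for z in (0, 1, 2, 5, 10):
    print(z, f"{attacker_success_probability(0.1, z):.7f}")
```

With q = 0.1, the probability falls from 1 at z = 0 to roughly 0.2 after one confirmation and to about one in a thousand after five. This is why waiting for more confirmations makes a reversal increasingly unlikely.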

Comparison Of BFT Algorithms:

Several Byzantine fault-tolerant (BFT) algorithms are available, each with its own characteristics, trade-offs, and suitability for different use cases.

The choice of a BFT algorithm depends on factors such as performance, fault tolerance, scalability, transaction finality, network characteristics, and trust assumptions. These factors differ across permissioned and permissionless blockchains, distributed databases, and file systems.

Byzantine Generals Problem In Computer Networks

There are several reasons why a distributed computer system can suffer Byzantine failures, and they are not always coordinated malicious attacks. Faults ranging from software defects to hardware malfunctions can prevent nodes from reaching consensus in a distributed network.

Reasonably secure networks can withstand a few offline or malicious nodes without affecting the whole network; Byzantine fault tolerance is the ability to handle such conditions. In 2008, Bitcoin’s white paper proposed a solution to computer science’s Byzantine Generals Problem:

“A purely peer-to-peer version of electronic cash would allow online payments to be sent directly from one party to another without going through a financial institution,” stated Satoshi Nakamoto in the paper.

He created a global monetary system that, for the first time in history, could operate trustlessly, programmed to disincentivize bad behavior and to encourage nodes and miners to act honestly instead.

Money And The Byzantine Generals Problem

The Byzantine Generals Problem analogy can be extended to money and financial transactions, particularly in the context of decentralized digital currencies like bitcoin.

How can financial transactions be executed in a secure and trustless environment without the need for a central authority to verify and finalize the transfer of value, even in the presence of Byzantine behavior?

To solve the Byzantine Generals Problem, money must be completely trustless. Therefore, it should be verifiable, secure, transparent, decentralized, and counterfeit-resistant. Bitcoin was designed with these attributes as its fundamental principles, resulting in a breakthrough solution to the Byzantine Generals Problem.

Bitcoin’s Solution

- Blockchain Solves The Double-Spend Problem

Bitcoin tracks users’ ownership and prevents double spending in a trustless manner using a blockchain. The blockchain is a public, distributed ledger that stores the history of all transactions and serves as the single record of truth that all parties (the nodes) must agree on, resolving the Byzantine Generals Problem.

Double spending refers to the possibility of spending the same digital currency unit more than once, which would undermine the integrity and value of the currency.

Such a network of nodes is needed to verify bitcoin ownership through consensus mechanisms and cryptographic techniques (such as digital signatures), solving the double-spending problem without a central authority.

Byzantine fault-tolerant consensus protocols in blockchain systems help prevent double spending by establishing agreement on the order and validity of transactions. Trust is placed in the underlying protocol and network consensus rather than a central intermediary.
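
Bitcoin nodes catch double spends by tracking unspent transaction outputs (UTXOs). A transaction may spend only an output that is still unspent, and spending it removes it from the set. The following minimal Python sketch shows that bookkeeping. It is hypothetical and heavily simplified: amounts, scripts, and signature checks are omitted, and the `Tx` type and `apply_block` function are illustrative names, not Bitcoin Core APIs.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

Outpoint = Tuple[str, int]  # (transaction id, output index)


@dataclass
class Tx:
    txid: str
    inputs: List[Outpoint]  # outputs this transaction spends
    n_outputs: int          # number of new outputs it creates


def apply_block(utxos: Set[Outpoint], txs: List[Tx]) -> Set[Outpoint]:
    """Return the updated UTXO set, or raise ValueError on a double spend."""
    updated = set(utxos)  # work on a copy so a rejected block changes nothing
    for tx in txs:
        for outpoint in tx.inputs:
            if outpoint not in updated:
                raise ValueError(f"{tx.txid} spends missing or already-spent output {outpoint}")
            updated.remove(outpoint)
        for index in range(tx.n_outputs):
            updated.add((tx.txid, index))
    return updated


utxos = {("coinbase", 0)}
pay_bob = Tx("a", [("coinbase", 0)], 2)
pay_carol = Tx("b", [("coinbase", 0)], 1)  # tries to spend the same coin again

print(apply_block(utxos, [pay_bob]))
try:
    apply_block(utxos, [pay_bob, pay_carol])
except ValueError as err:
    print("rejected:", err)
```

Every node applies the same rule to the same ordered ledger. As a result, all honest nodes reject the second spend without consulting any central party.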

- Proof-Of-Work Solves The Byzantine Generals Problem

Proof of work requires a great deal of energy, computation, and expense to produce a new block. This proof of computational work helps secure the network against Sybil attacks by making the addition of new blocks to the chain resource-intensive and costly.

Network members who publish invalid information, such as a block that breaks the consensus rules, are caught immediately: every node that validates the data recognizes it as invalid and ignores it. Bitcoin is a trustless system because every node can verify all information on the network without needing to trust other network members.
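
Independent verification works because each block commits to the hash of the block before it. Altering any past block therefore breaks every later link, unless the attacker redoes all of that proof of work. The toy Python sketch below is hypothetical: blocks are plain strings joined and hashed once with SHA-256, and the difficulty is tiny. It shows a node re-checking the whole chain on its own.

```python
import hashlib
from dataclasses import dataclass
from typing import List

DIFFICULTY_BITS = 8  # toy difficulty: about 1 in 256 hashes qualifies
TARGET = 1 << (256 - DIFFICULTY_BITS)


@dataclass
class Block:
    prev_hash: str  # hash of the previous block, linking the chain
    payload: str    # stand-in for the block's transactions
    nonce: int


def block_hash(block: Block) -> str:
    data = f"{block.prev_hash}|{block.payload}|{block.nonce}".encode()
    return hashlib.sha256(data).hexdigest()


def mine_block(prev_hash: str, payload: str) -> Block:
    block = Block(prev_hash, payload, 0)
    while int(block_hash(block), 16) >= TARGET:
        block.nonce += 1
    return block


def validate_chain(chain: List[Block], genesis_prev: str = "0" * 64) -> bool:
    """Check every link and every proof of work without trusting anyone."""
    expected_prev = genesis_prev
    for block in chain:
        if block.prev_hash != expected_prev:
            return False  # broken link: an earlier block was altered
        h = block_hash(block)
        if int(h, 16) >= TARGET:
            return False  # missing proof of work
        expected_prev = h
    return True


chain = []
prev = "0" * 64
for payload in ["alice->bob 1", "bob->carol 1", "carol->dave 1"]:
    block = mine_block(prev, payload)
    chain.append(block)
    prev = block_hash(block)

print(validate_chain(chain))           # True
chain[0].payload = "alice->mallory 1"  # tamper with history
print(validate_chain(chain))           # False
```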

Conclusion

As society moves increasingly toward distributed systems and adopts decentralized money like bitcoin, solving the Byzantine Generals Problem becomes essential for coordinating the actions of multiple independent parties without relying on central authorities.

To be successful, systems must ensure Byzantine fault tolerance and guarantee resilience and security — even in the presence of flawed information — so that a consensus can be reached despite the possibility of deception and betrayal from some parties.

Bitcoin is ideally suited to providing a trustless environment that can withstand attacks. Its proof-of-work algorithm has protected the network’s security by fostering competition among miners, making it computationally infeasible for any single entity to control the network. This decentralized design, underpinned by Byzantine fault tolerance, offers a robust model for ensuring consensus and security despite potential misinformation and malicious intent.


February Employment Situation


By Paul Gomme and Peter Rupert

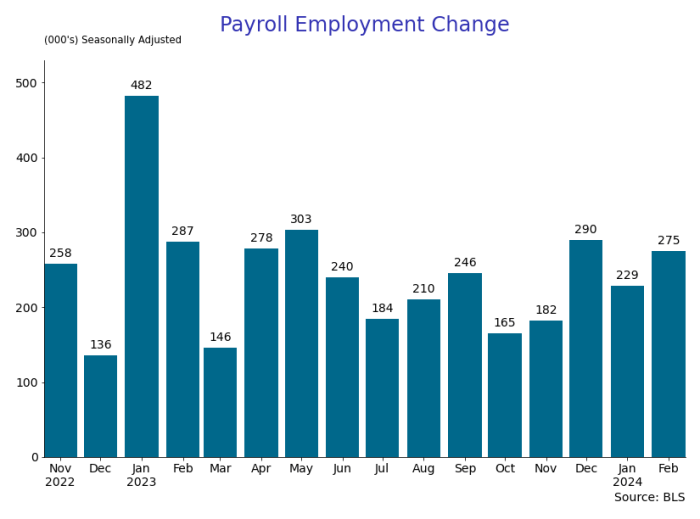

The establishment data from the BLS showed a 275,000 increase in payroll employment for February, outpacing the 230,000 average over the previous 12 months. The payroll data for January and December were revised down by a total of 167,000. The private sector added 223,000 new jobs, the largest gain since May of last year.

Temporary help services employment continues a steep decline after a sharp post-pandemic rise.

Average weekly hours of work increased from 34.2 to 34.3. This increase, together with the 223,000 gain in private employment, led to a hefty 5.6% annualized increase in total hours, also the largest since May of last year.
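
The 5.6% figure can be roughly reproduced by treating total hours as employment times average weekly hours and compounding the one-month change over a year. This Python sketch is an approximation, not the BLS method. The private payroll level of about 135 million before the gain is an assumed round figure, not taken from the report.

```python
def annualized_growth(monthly_ratio: float) -> float:
    """Compound a one-month growth ratio over twelve months."""
    return monthly_ratio ** 12 - 1


# From the text: average weekly hours rose from 34.2 to 34.3,
# and private employment rose by 223,000.
hours_ratio = 34.3 / 34.2
# ASSUMPTION: a private payroll level of about 135 million before the gain
# (a round illustrative figure, not taken from the report).
private_before = 135_000_000
employment_ratio = (private_before + 223_000) / private_before

# Total hours ~ workers x hours per worker, so the growth ratios multiply.
total_hours_ratio = hours_ratio * employment_ratio
print(f"annualized growth in total hours: {annualized_growth(total_hours_ratio):.1%}")
```

Most of the gain comes from the longer workweek. The 223,000 new jobs are a small share of the payroll base, so the result is not very sensitive to the assumed payroll level.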

The establishment report, once again, beat “expectations”; the WSJ survey of economists was 198,000. Other than the downward revisions mentioned above, another bit of negative news was a smallish increase in average hourly earnings, from $34.52 to $34.57.

The household survey shows that the labor force increased by 150,000, reflecting a 184,000 drop in employment and a 334,000 increase in the number of unemployed persons. The labor force participation rate held steady at 62.5%, the employment-to-population ratio decreased from 60.2% to 60.1%, and the unemployment rate increased from 3.66% to 3.86%. Remember that the unemployment rate is the number of unemployed relative to the labor force (the number employed plus the number unemployed). Consequently, the unemployment rate can go up if the number of unemployed rises while the labor force is unchanged, or if the labor force shrinks while the number of unemployed is unchanged. An increase in the unemployment rate is not necessarily a bad thing: it may reflect a strong labor market drawing “marginally attached” individuals into the labor force from outside it. Indeed, the number of those workers declined by 96,000.
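
The definition above can be made concrete in a few lines of Python. The numbers below are hypothetical round figures in millions, not taken from the report. They show the mechanisms described: more unemployed with a fixed labor force, a shrinking labor force with the same unemployed, and new job seekers joining the labor force.

```python
def unemployment_rate(employed: float, unemployed: float) -> float:
    """Unemployed as a share of the labor force (employed + unemployed)."""
    return unemployed / (employed + unemployed)


# Hypothetical round numbers (millions), not taken from the report.
base = unemployment_rate(95, 5)             # labor force of 100
more_unemployed = unemployment_rate(94, 6)  # labor force fixed at 100
smaller_force = unemployment_rate(90, 5)    # unemployed fixed, labor force shrinks
new_searchers = unemployment_rate(95, 6)    # 1 million people start looking for work

for label, rate in [("base", base), ("more unemployed", more_unemployed),
                    ("smaller labor force", smaller_force),
                    ("new job seekers", new_searchers)]:
    print(f"{label}: {rate:.1%}")
```

The last case is the benign one. Nobody lost a job, yet the rate rises from 5.0% to about 5.9% because people who were previously outside the labor force are now counted as searching.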

Earlier in the week, the BLS released JOLTS (Job Openings and Labor Turnover Survey) data for January. There isn’t much to report: job openings changed little at 8.9 million, and hires and total separations were little changed at 5.7 million and 5.3 million, respectively.

As has been the case for the last couple of years, the number of job openings remains higher than the number of unemployed persons.

Also earlier in the week the BLS announced that productivity increased 3.2% in the 4th quarter with output rising 3.5% and hours of work rising 0.3%.
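
These three numbers are linked by the definition of productivity as output per hour. Growth factors therefore divide, and for small rates the result is close to output growth minus hours growth. This short Python check uses only the figures quoted above.

```python
# Annualized fourth-quarter growth rates quoted in the text.
output_growth = 0.035
hours_growth = 0.003

# Productivity = output / hours, so growth factors divide.
productivity_growth = (1 + output_growth) / (1 + hours_growth) - 1
print(f"productivity growth: {productivity_growth:.1%}")  # 3.2%

# The familiar shortcut subtracts the rates and lands in the same place.
print(f"shortcut: {output_growth - hours_growth:.1%}")     # 3.2%
```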

The bottom line is that the labor market continues its surprisingly (to some) strong performance, once again proving stronger than many had expected. This strength makes it difficult to justify interest rate cuts any time soon, particularly given the recent inflation spike.


Mortgage rates fall as labor market normalizes

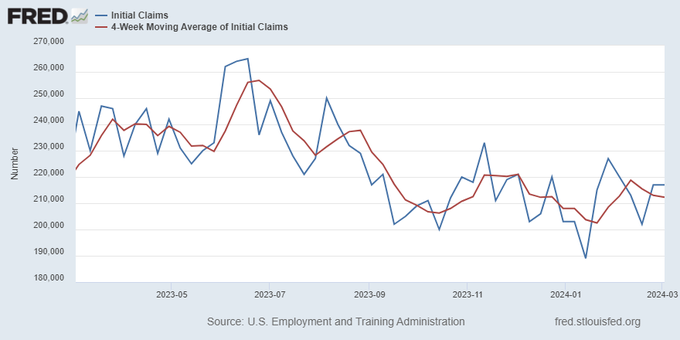


Everyone was waiting to see whether this week’s jobs report would send mortgage rates higher, as happened last month. Instead, the 10-year yield had a muted response: the headline number beat estimates, but previous months were revised lower. The Federal Reserve’s fear of wage growth spiraling out of control hasn’t materialized for over two years now, and the unemployment rate ticked up to 3.9%. For now, we can say the labor market isn’t tight anymore, but it’s also not breaking.

The key labor data line in this expansion is the weekly jobless claims report. Jobless claims show an expanding economy that has not lost jobs yet. We will only be in a recession once jobless claims exceed 323,000 on a four-week moving average.

From the Fed: In the week ended March 2, initial claims for unemployment insurance benefits were flat, at 217,000. The four-week moving average declined slightly by 750, to 212,250.
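
A four-week moving average smooths out noisy weekly claims before comparing them with the recession threshold the author uses. Below is a minimal Python sketch. The latest week (217,000) and the 323,000 threshold come from the text. The first three weekly values are hypothetical, chosen so that the average matches the 212,250 quoted above.

```python
from collections import deque
from typing import List

RECESSION_THRESHOLD = 323_000  # the author's four-week-average threshold


def four_week_averages(weekly_claims: List[int]) -> List[float]:
    """Average of each run of four consecutive weeks."""
    window = deque(maxlen=4)  # keeps only the latest four weeks
    averages = []
    for claims in weekly_claims:
        window.append(claims)
        if len(window) == 4:
            averages.append(sum(window) / 4)
    return averages


# Hypothetical first three weeks; only the last value (217,000) is from the text.
claims = [210_000, 212_000, 210_000, 217_000]
latest = four_week_averages(claims)[-1]
print(latest, latest > RECESSION_THRESHOLD)  # 212250.0 False
```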

Below is an explanation of how we got here with the labor market, which all started during COVID-19.

1. I wrote the COVID-19 recovery model on April 7, 2020, and retired it on Dec. 9, 2020. By then, the upfront recovery phase was done, and I needed to model when we would get back the jobs that were lost.

2. Early in the labor market recovery, when we saw weaker job reports, I doubled and tripled down on my assertion that job openings would get to 10 million in this recovery. Job openings rose as high as 12 million and are currently over 9 million. Even with the massive miss on the May 2021 jobs report, I didn’t waver.

Currently, the job openings, quits percentage and hires data are below pre-COVID-19 levels, which means the labor market isn’t as tight as it once was. This is why the employment cost index has been slowing, moving along with the quits percentage.

3. I wrote that we should get back all the jobs lost to COVID-19 by September 2022. At the time, that would have been a speedy labor market recovery, and it happened on schedule, too.

Total employment data

4. This is the key one for right now: If COVID-19 hadn’t happened, we would have between 157 million and 159 million jobs today, which would have been in line with the job growth rate in February 2020. Today, we are at 157,808,000. This is important because job growth should be cooling down now: with employment back where it would have been, the labor market should be averaging 140K-165K jobs a month. So for now, the fact that we aren’t yet trending between 140K-165K means we still have a bit more recovery kick left before we get down to those levels.

From BLS: Total nonfarm payroll employment rose by 275,000 in February, and the unemployment rate increased to 3.9 percent, the U.S. Bureau of Labor Statistics reported today. Job gains occurred in health care, in government, in food services and drinking places, in social assistance, and in transportation and warehousing.

Here are the jobs that were created and lost in the previous month:

In this jobs report, the unemployment rates by education level look like this:

- Less than a high school diploma: 6.1%

- High school graduate and no college: 4.2%

- Some college or associate degree: 3.1%

- Bachelor’s degree or higher: 2.2%

Today’s report has continued the trend of the labor data beating my expectations, but only because I am looking for the jobs data to slow down to a level of 140K-165K, which hasn’t happened yet. I wouldn’t categorize the labor market as tight anymore because of the quits ratio and the hires data in the job openings report. This also shows up in the employment cost index. These are key data lines for the Fed and the reason we are going to see three rate cuts this year.


Inside The Most Ridiculous Jobs Report In History: Record 1.2 Million Immigrant Jobs Added In One Month


Last month we thought that the January jobs report was the "most ridiculous in recent history" but, boy, were we wrong because this morning the Biden department of goalseeked propaganda (aka BLS) published the February jobs report, and holy crap was that something else. Even Goebbels would blush.

What happened? Let's take a closer look.

On the surface, it was (almost) another blockbuster jobs report, certainly one which nobody expected, or rather just one bank out of 76 expected. Starting at the top, the BLS reported that in February the US unexpectedly added 275K jobs, with just one research analyst (from Dai-Ichi Research) expecting a higher number.

Some context: after last month's record 4-sigma beat, today's print was "only" 3 sigma higher than estimates. Needless to say, two multiple sigma beats in a row used to only happen in the USSR... and now in the US, apparently.
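
A "sigma" beat measures how far the actual print landed from the consensus, in units of the spread of economists' forecasts. This Python sketch uses hypothetical forecasts, because the survey's actual distribution is not given here. Only the 275K actual comes from the report.

```python
import statistics

# Hypothetical forecasts in thousands of jobs; the real survey distribution is not in the text.
forecasts = [150, 175, 200, 200, 225, 250]
actual = 275  # February payroll gain reported by the BLS, in thousands

consensus = statistics.mean(forecasts)
spread = statistics.stdev(forecasts)  # sample standard deviation of forecasts
z = (actual - consensus) / spread     # size of the beat in "sigmas"
print(f"consensus {consensus:.0f}K, sigma {spread:.1f}K, beat of {z:.1f} sigma")
```

With a tighter cluster of forecasts, the same 275K print would count as a larger number of sigmas. That is why the dispersion of estimates matters as much as the consensus itself.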

Before we go any further, a quick note on what last month we said was "the most ridiculous jobs report in recent history": it appears the BLS read our comments and decided to stop beclowning itself. It did that by slashing last month's ridiculous print by over a third, revising what was originally reported as a massive 353K beat to just 229K, a 124K revision, which was the biggest one-month negative revision in two years!

Of course, that does not mean that this month's jobs print won't be revised lower: it will be, and not just that month but every other month until the November election because that's the only tool left in the Biden admin's box: pretend the economy and jobs are strong, then revise them sharply lower the next month, something we pointed out first last summer and which has not failed to disappoint once.

In the past month the Biden department of goalseeking stuff higher before revising it lower, has revised the following data sharply lower:

- Jobs

- JOLTS

- New Home sales

- Housing Starts and Permits

- Industrial Production

- PCE and core PCE

— zerohedge (@zerohedge) August 30, 2023

To be fair, not every aspect of the jobs report was stellar (after all, the BLS had to give it some vague credibility). Take the unemployment rate: after flatlining between 3.4% and 3.8% for two years, and thus denying expectations from the Sahm Rule that a recession may have already started, in February the unemployment rate unexpectedly jumped to 3.9%, the highest since February 2022 (with Black unemployment spiking by 0.3 percentage points to 5.6%, an indicator which the Biden admin will quickly slam as widespread economic racism or something).
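
The Sahm Rule compares the latest three-month average unemployment rate with the lowest three-month average of the previous 12 months. It signals a recession once the gap reaches 0.50 percentage points. Below is a minimal Python sketch of that calculation. The unemployment series in it is hypothetical, not actual BLS data.

```python
from typing import List

SAHM_THRESHOLD = 0.50  # percentage points


def sahm_gap(monthly_rates: List[float]) -> float:
    """Latest 3-month average unemployment rate minus the lowest 3-month
    average over the previous 12 months (all in percent)."""
    if len(monthly_rates) < 15:
        raise ValueError("need at least 15 months of unemployment rates")
    three_month = [sum(monthly_rates[i - 2:i + 1]) / 3
                   for i in range(2, len(monthly_rates))]
    return three_month[-1] - min(three_month[-13:-1])


# Hypothetical series: flat at 3.5%, then rising 0.2 points a month.
early = [3.5] * 12 + [3.7, 3.9, 4.1]
later = early + [4.3]
for label, rates in [("early", early), ("later", later)]:
    gap = sahm_gap(rates)
    print(label, round(gap, 2), "signal" if gap >= SAHM_THRESHOLD else "no signal")
```

Because the rule averages over three months, a single jump such as February's move to 3.9% raises the gap only gradually.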

And then there were average hourly earnings, which after surging 0.6% MoM in January (since revised to 0.5%) and spooking markets that wage growth is so hot, the Fed will have no choice but to delay cuts, in February the number tumbled to just 0.1%, the lowest in two years...

... for one simple reason: last month's average wage surge had nothing to do with actual wages, and everything to do with the BLS estimate of hours worked (which is the denominator in the average wage calculation) which last month tumbled to just 34.1 (we were led to believe) the lowest since the covid pandemic...

... but has since been revised higher while the February print rose even more, to 34.3, hence why the latest average wage data was once again a product not of wages going up, but of how long Americans worked in any weekly period, in this case higher from 34.1 to 34.3, an increase which has a major impact on the average calculation.

While the above data points were examples of some latent weakness in the latest report, perhaps meant to give it a sheen of veracity, it was everything else in the report that was a problem starting with the BLS's latest choice of seasonal adjustments (after last month's wholesale revision), which have gone from merely laughable to full clownshow, as the following comparison between the monthly change in BLS and ADP payrolls shows. The trend is clear: the Biden admin numbers are now clearly rising even as the impartial ADP (which directly logs employment numbers at the company level and is far more accurate), shows an accelerating slowdown.

But it's more than just the Biden admin hanging its "success" on seasonal adjustments: when one digs deeper inside the jobs report, all sorts of ugly things emerge... such as the growing, unprecedented divergence between the Establishment (payrolls) survey and the much more accurate Household (actual employment) survey. To wit, while in February the BLS claims 275K payrolls were added, the Household survey found that the number of actually employed workers dropped for the third straight month (and 4 in the past 5), this time by 184K (from 161.152 million to 160.968 million).

This means that while the Payrolls series hits new all time highs every month since December 2020 (when according to the BLS the US had its last month of payrolls losses), the level of Employment has not budged in the past year. Worse, as shown in the chart below, such a gaping divergence has opened between the two series in the past 4 years, that the number of Employed workers would need to soar by 9 million (!) to catch up to what Payrolls claims is the employment situation.

There's more: shifting from a quantitative to a qualitative assessment reveals just how ugly the composition of "new jobs" has been. Consider this: the BLS reports that in February 2024, the US had 132.9 million full-time jobs and 27.9 million part-time jobs. Well, that's great... until you look back one year and find that in February 2023 the US had 133.2 million full-time jobs, or more than it does one year later! And yes, all the job growth since then has been in part-time jobs, which have increased by 921K since February 2023 (from 27.020 million to 27.941 million).

Here is a summary of the labor composition in the past year: all the new jobs have been part-time jobs!

But wait there's even more, because now that the primary season is over and we enter the heart of election season and political talking points will be thrown around left and right, especially in the context of the immigration crisis created intentionally by the Biden administration which is hoping to import millions of new Democratic voters (maybe the US can hold the presidential election in Honduras or Guatemala, after all it is their citizens that will be illegally casting the key votes in November), what we find is that in February, the number of native-born workers tumbled again, sliding by a massive 560K to just 129.807 million. Add to this the December data, and we get a near-record 2.4 million plunge in native-born workers in just the past 3 months (only the covid crash was worse)!

The offset? A record 1.2 million foreign-born (read immigrants, both legal and illegal but mostly illegal) workers added in February!

Said otherwise, not only has all job creation in the past 6 years been exclusively for foreign-born workers...

... but there has been zero job-creation for native born workers since June 2018!

This is a huge issue - especially at a time of an illegal alien flood at the southwest border...

... and is about to become a huge political scandal, because once the inevitable recession finally hits, there will be millions of furious unemployed Americans demanding a more accurate explanation for what happened - i.e., the illegal immigration floodgates that were opened by the Biden admin.

Which is also why Biden's handlers will do everything in their power to ensure there is no official recession before November... and why after the election is over, all economic hell will finally break loose. Until then, however, expect the jobs numbers to get even more ridiculous.
