Noise in the brain enables us to make extraordinary leaps of imagination. It could transform the power of computers too

From more accurate climate modelling to the prospect of truly creative computers, the brain’s use of noise has a lot to teach us.

We all have to make hard decisions from time to time. The hardest of my life was whether or not to change research fields after my PhD, from fundamental physics to climate physics. I had job offers that could have taken me in either direction – one to join Stephen Hawking’s Relativity and Gravitation Group at Cambridge University, another to join the Met Office as a scientific civil servant.

I wrote down the pros and cons of both options as one is supposed to do, but then couldn’t make up my mind at all. Like Buridan’s donkey, I was unable to move to either the bale of hay or the pail of water. It was a classic case of paralysis by analysis.

Since it was doing my head in, I decided to try to forget about the problem for a couple of weeks and get on with my life. In that intervening time, my unconscious brain decided for me. I simply walked into my office one day and the answer had somehow become obvious: I would make the change to studying the weather and climate.

More than four decades on, I’d make the same decision again. My fulfilling career has included developing a new, probabilistic way of forecasting weather and climate which is helping humanitarian and disaster relief agencies make better decisions ahead of extreme weather events. (This and many other aspects are described in my new book, The Primacy of Doubt.)

But I remain fascinated by what was going on in my head back then, which led my subconscious to make a life-changing decision that my conscious mind could not. Is there something to be understood here not only about how to make difficult decisions, but about how humans make the leaps of imagination that characterise us as such a creative species? I believe the answer to both questions lies in a better understanding of the extraordinary power of noise.

Imprecise supercomputers

I went from the pencil-and-paper mathematics of Einstein’s theory of general relativity to running complex climate models on some of the world’s biggest supercomputers. Yet big as they were, they were never big enough – the real climate system is, after all, very complex.

In the early days of my research, one only had to wait a couple of years and top-of-the-range supercomputers would get twice as powerful. This was the era when transistors were getting smaller and smaller, allowing more to be crammed on to each microchip. The doubling of the number of transistors on a chip roughly every couple of years, which brought with it a similar doubling of computer performance for the same power, was known as Moore's Law.

There is, however, only so much miniaturisation you can do before the transistor starts becoming unreliable in its key role as an on-off switch. Today, with transistors starting to approach atomic size, we have pretty much reached the limit of Moore’s Law. To achieve more number-crunching capability, computer manufacturers must bolt together more and more computing cabinets, each one crammed full of chips.

But there’s a problem. Increasing number-crunching capability this way requires a lot more electric power – modern supercomputers the size of tennis courts consume tens of megawatts. I find it something of an embarrassment that we need so much energy to try to accurately predict the effects of climate change.

That’s why I became interested in how to construct a more accurate climate model without consuming more energy. And at the heart of this is an idea that sounds counterintuitive: by adding random numbers, or “noise”, to a climate model, we can actually make it more accurate in predicting the weather.

A constructive role for noise

Noise is usually seen as a nuisance – something to be minimised wherever possible. In telecommunications, we speak about trying to maximise the “signal-to-noise ratio” by boosting the signal or reducing the background noise as much as possible. However, in nonlinear systems, noise can be your friend and actually contribute to boosting a signal. (A nonlinear system is one whose output does not vary in direct proportion to the input. You will likely be very happy to win £100 million on the lottery, but probably not twice as happy to win £200 million.)
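A standard toy illustration of noise boosting a signal in a nonlinear system is known as stochastic resonance. The Python below is a minimal sketch under illustrative assumptions: a sine-wave signal of amplitude 0.5, a detector that fires only when its input exceeds 1.0, and Gaussian noise. The function name and every parameter are hypothetical. On its own, the weak signal never crosses the threshold, so the detector reports nothing. With moderate noise added, the detector starts firing, and it fires far more often near the signal's peaks than near its troughs, so its output now carries the signal.

```python
import math
import random


def threshold_detector(signal_amp, noise_sd, threshold=1.0, n=20000, seed=0):
    """Count firings of an all-or-nothing detector near the peaks and troughs
    of a sine-wave signal, with Gaussian noise added to its input."""
    rng = random.Random(seed)
    fires_at_peaks = 0
    fires_at_troughs = 0
    for i in range(n):
        s = signal_amp * math.sin(2 * math.pi * i / 100)  # 100 samples per cycle
        x = s + rng.gauss(0.0, noise_sd)                  # noisy input
        if x > threshold:                                 # nonlinear: fire or don't
            if s > 0.9 * signal_amp:
                fires_at_peaks += 1
            elif s < -0.9 * signal_amp:
                fires_at_troughs += 1
    return fires_at_peaks, fires_at_troughs


print(threshold_detector(0.5, 0.0))  # (0, 0): the weak signal alone never fires it
print(threshold_detector(0.5, 0.5))  # many more firings at peaks than at troughs
```

Much larger noise would swamp the signal again, because the firings would become almost independent of it. The benefit therefore depends on the noise being of the right size.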

Noise can, for example, help us find the maximum value of a complicated curve such as in Figure 1, below. There are many situations in the physical, biological and social sciences as well as in engineering where we might need to find such a maximum. In my field of meteorology, the process of finding the best initial conditions for a global weather forecast involves identifying the maximum point of a very complicated meteorological function.

Figure 1

However, employing a “deterministic algorithm” to locate the global maximum doesn’t usually work. This type of algorithm will typically get stuck at a local peak (for example at point a) because the curve moves downwards in both directions from there.

An answer is to use a technique called “simulated annealing” – so called because of its similarities with annealing, the heat treatment process that changes the properties of metals. Simulated annealing, which employs noise to get round the issue of getting stuck at local peaks, has been used to solve many problems, including the classic travelling salesman puzzle of finding the shortest route that visits every one of a large number of cities on a map.

Figure 1 shows a possible route to locating the curve’s global maximum (point 9) by using the following criteria:

If a randomly chosen point is higher than the current position on the curve, the search always moves to it.

If it is lower than the current position, the suggested point isn't necessarily rejected. It depends on whether the new point is a lot lower or just a little lower.

However, the decision to move to a new point also depends on how long the analysis has been running. Whereas in the early stages, random points quite a bit lower than the current position may be accepted, in later stages only those that are higher or just a tiny bit lower are accepted.

The technique is known as simulated annealing because early on – like hot metal in the early phase of cooling – the system is pliable and changeable. Later in the process – like cold metal in the late phase of cooling – it is almost rigid and unchangeable.
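The acceptance rules above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure behind Figure 1 or any weather forecasting system. The two-peaked curve `f`, the step size, the starting temperature and the cooling rate are all assumptions chosen for the example.

A purely uphill ("deterministic") search starting from x = 1 gets stuck on the local peak near x = 2. The annealing version behaves differently: it accepts a downhill move with probability exp(Δ/T), where Δ is the (negative) change in height, and it lowers the temperature T as it goes. This lets it cross the valley to the global peak near x = 8. The annealing function also remembers the best point it has visited, which is a common practical addition.

```python
import math
import random


def f(x):
    """Toy curve: a local peak near x = 2 (height ~1), the global peak near x = 8 (height ~2)."""
    return math.exp(-(x - 2) ** 2) + 2 * math.exp(-(x - 8) ** 2)


def propose(x, rng, step=0.5, lo=0.0, hi=10.0):
    """A randomly chosen nearby point, clamped to the interval [lo, hi]."""
    return min(max(x + rng.gauss(0.0, step), lo), hi)


def hill_climb(x, steps=10000, seed=0):
    """Deterministic acceptance rule: only ever move uphill."""
    rng = random.Random(seed)
    for _ in range(steps):
        cand = propose(x, rng)
        if f(cand) > f(x):
            x = cand
    return x, f(x)


def simulated_annealing(x, steps=10000, t0=2.0, cooling=0.999, seed=0):
    """Always accept uphill moves; accept a downhill move with probability
    exp(delta / t), where the 'temperature' t shrinks as the run goes on."""
    rng = random.Random(seed)
    best_x, best_f = x, f(x)
    t = t0
    for _ in range(steps):
        cand = propose(x, rng)
        delta = f(cand) - f(x)
        if delta > 0 or rng.random() < math.exp(delta / t):
            x = cand
            if f(x) > best_f:
                best_x, best_f = x, f(x)
        t *= cooling  # geometric cooling: pliable early, nearly rigid late
    return best_x, best_f


print(hill_climb(1.0))           # stuck on the local peak near x = 2
print(simulated_annealing(1.0))  # reaches the global peak near x = 8
```

If the cooling schedule is made faster, the search freezes earlier and is more likely to stay trapped, much as quenching differs from annealing a metal.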

How noise can help climate models

Noise was introduced into comprehensive weather and climate models around 20 years ago. A key reason was to represent model uncertainty in our ensemble weather forecasts – but it turned out that adding noise also reduced some of the biases the models had, making them more accurate simulators of weather and climate.

Unfortunately, these models require huge supercomputers and a lot of energy to run. They divide the world into small gridboxes, with the atmosphere and ocean within each assumed to be uniform – which, of course, they aren't. The horizontal scale of a typical gridbox is around 100km – so one way of making a model more accurate is to reduce this distance to 50km, or 10km or 1km. However, halving the size of a gridbox in each of its three dimensions creates eight times as many gridboxes, and the time step between calculations typically has to be halved as well. The computational cost of running the model therefore rises by up to a factor of 16, meaning it consumes a lot more energy.
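The factor of 16 follows from a short calculation, sketched here in Python under stated assumptions. Halving the gridbox size in all three dimensions gives 2 × 2 × 2 = 8 times as many gridboxes. In addition, if the time step has to shrink in step with the grid spacing (as a CFL-type stability condition typically requires for explicit schemes), there are twice as many time steps. The function name and parameters are illustrative. Real models differ in how they treat the vertical and the time step, which is why the figure is “up to” 16.

```python
def cost_factor(refinement, spatial_dims=3, timestep_scales=True):
    """Rough multiplier on compute cost when grid spacing shrinks by `refinement`.

    Assumes the number of gridboxes grows as refinement**spatial_dims and,
    if timestep_scales is True, that the time step shrinks in proportion to
    the grid spacing (a CFL-type condition), adding one more factor.
    """
    factor = refinement ** spatial_dims
    if timestep_scales:
        factor *= refinement
    return factor


print(cost_factor(2))                  # 16: halving every dimension of a gridbox
print(cost_factor(2, spatial_dims=2))  # 8: refining the horizontal only
print(cost_factor(10))                 # 10000: a tenfold refinement in every dimension
```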

Here again, noise offered an appealing alternative. The proposal was to use it to represent the unpredictable (and unmodellable) variations in small-scale climatic processes like turbulence, cloud systems, ocean eddies and so on. I argued that adding noise could be a way of boosting accuracy without having to incur the enormous computational cost of reducing the size of the gridboxes. For example, as has now been verified, adding noise to a climate model increases the likelihood of producing extreme hurricanes – reflecting the potential reality of a world whose weather is growing more extreme due to climate change.
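Noise can let a nonlinear model reach states that its noise-free counterpart never visits. To show this, consider a toy “double-well” equation rather than a climate model: dx = (x - x^3) dt + sigma dW. This Python sketch is an illustration built on assumptions. The equation, the noise amplitude sigma and the time step are chosen for the example and are not taken from any weather or climate model. Without noise, a state starting at x = 0.5 settles into the regime at x = +1 and stays there. With noise, it is kicked back and forth between the two regimes.

```python
import random


def simulate(noise_sd, x0=0.5, dt=0.01, steps=100_000, seed=1):
    """Euler-Maruyama integration of the toy double-well equation
    dx = (x - x**3) dt + noise_sd dW, whose noise-free version has two
    stable 'regimes' at x = -1 and x = +1.

    Returns the lowest value of x reached during the run.
    """
    rng = random.Random(seed)
    x = x0
    lowest = x
    for _ in range(steps):
        drift = (x - x**3) * dt
        kick = noise_sd * rng.gauss(0.0, dt ** 0.5)  # Brownian increment, sd sqrt(dt)
        x += drift + kick
        lowest = min(lowest, x)
    return lowest


print(simulate(0.0))  # 0.5: without noise the state only moves towards x = +1
print(simulate(0.8))  # well below zero: noise has carried it into the other regime
```

The simple Euler-Maruyama scheme is used here for brevity. A real model would need a more careful treatment of both the numerics and the noise.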

The computer hardware we use for this modelling is inherently noisy – electrons travelling along wires in a computer move in partly random ways due to its warm environment. Such randomness is called “thermal noise”. Could we save even more energy by tapping into it, rather than having to use software to generate pseudo-random numbers? To me, low-energy “imprecise” supercomputers that are inherently noisy looked like a win-win proposal.
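Python's standard library can show the difference between software pseudo-random numbers and noise that comes from outside the program. It also shows why that difference might worry colleagues who value bit-reproducibility. This is a sketch under assumptions. `random.SystemRandom` draws on the operating system's entropy source (`os.urandom`), which may mix in hardware events, but it is not a direct tap on a processor's thermal noise. The function names are hypothetical. A seeded pseudo-random generator repeats its sequence exactly; the entropy-based one does not.

```python
import random


def pseudo_random_noise(n, seed):
    """Software pseudo-random numbers: the same seed always gives the same sequence."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def entropy_noise(n):
    """Numbers drawn via random.SystemRandom, which uses os.urandom.

    Only a stand-in for hardware noise: there is no seed to fix, so runs
    cannot be repeated bit for bit.
    """
    rng = random.SystemRandom()
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


run_a = pseudo_random_noise(5, seed=42)
run_b = pseudo_random_noise(5, seed=42)
print(run_a == run_b)                        # True: bit-reproducible
print(entropy_noise(5) == entropy_noise(5))  # False, with overwhelming probability
```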

But not all of my colleagues were convinced. They were uncomfortable that computers might not give the same answers from one day to the next. To try to persuade them, I began to think about other real-world systems that, because of limited energy availability, also use noise that is generated within their hardware. And I stumbled on the human brain.

Noise in the brain

Every second of the waking day, our eyes alone send gigabytes of data to the brain. That’s not much different to the amount of data a climate model produces each time it outputs data to memory.

The brain has to process this data and somehow make sense of it. If it did this using the power of a supercomputer, that would be impressive enough. But it does it using one millionth of that power, about 20W instead of 20MW – what it takes to power a lightbulb. Such energy efficiency is mind-bogglingly impressive. How on Earth does the brain do it?

An adult brain contains some 80 billion neurons. Each neuron has a long slender biological cable – the axon – along which electrical impulses are transmitted from one set of neurons to the next. But these impulses, which collectively describe information in the brain, have to be boosted by protein “transistors” positioned at regular intervals along the axons. Without them, the signal would dissipate and be lost.

The energy for these boosts ultimately comes from ATP (adenosine triphosphate), an organic compound that neurons produce using the glucose and oxygen delivered to them by the blood. This enables electrically charged atoms of sodium and potassium (ions) to be pushed through small channels in the neuron walls, creating electrical voltages which, much like those in silicon transistors, amplify the neuronal electric signals as they travel along the axons.

With 20W of power spread across tens of billions of neurons, the voltages involved are tiny, as are the axon cables. And there is evidence that axons with a diameter of less than about 1 micron (which describes most axons in the brain) are susceptible to noise. In other words, the brain is a noisy system.
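The power figures in this section reduce to simple arithmetic. The Python lines below use only the round numbers quoted in the text: about 20MW for a supercomputer, about 20W for the brain, and some 80 billion neurons. Dividing the brain's power equally among its neurons is a crude assumption, since not all of that power goes to neurons.

```python
SUPERCOMPUTER_W = 20e6  # ~20MW, the supercomputer figure used in the text
BRAIN_W = 20.0          # ~20W for the brain
NEURONS = 80e9          # ~80 billion neurons

ratio = SUPERCOMPUTER_W / BRAIN_W
watts_per_neuron = BRAIN_W / NEURONS  # crude average share per neuron

print(f"{ratio:.0e}")             # 1e+06: the brain uses one millionth of the power
print(f"{watts_per_neuron:.1e}")  # 2.5e-10 W, about a quarter of a nanowatt each
```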

If this noise simply created unhelpful “brain fog”, one might wonder why we evolved to have so many slender axons in our heads. Indeed, there are benefits to having fatter axons: the signals propagate along them faster. If we still needed fast reaction times to escape predators, then slender axons would be disadvantageous. However, developing communal ways of defending ourselves against enemies may have reduced the need for fast reaction times, leading to an evolutionary trend towards thinner axons.

Perhaps, serendipitously, evolutionary mutations that further increased neuron numbers and reduced axon sizes, keeping overall energy consumption the same, made the brain’s neurons more susceptible to noise. And there is mounting evidence that this had another remarkable effect: it encouraged in humans the ability to solve problems that required leaps in imagination and creativity.

Perhaps we only truly became Homo sapiens when significant noise began to appear in our brains?

Putting noise in the brain to good use

Many animals have developed creative approaches to solving problems, but there is nothing to compare with a Shakespeare, a Bach or an Einstein in the animal world.

How do creative geniuses come up with their ideas? Here’s a quote from Andrew Wiles, perhaps the most famous mathematician alive today, about the time leading up to his celebrated proof of the maths problem (misleadingly) known as Fermat’s Last Theorem:

When you reach a real impasse, then routine mathematical thinking is of no use to you. Leading up to that kind of new idea, there has to be a long period of tremendous focus on the problem without any distraction. You have to really think about nothing but that problem – just concentrate on it. And then you stop. [At this point] there seems to be a period of relaxation during which the subconscious appears to take over – and it’s during this time that some new insight comes.

This notion seems universal. Physics Nobel Laureate Roger Penrose has spoken about his “Eureka moment” when crossing a busy street with a colleague (perhaps reflecting on their conversation while also looking out for oncoming traffic). For Henri Poincaré, the father of chaos theory, it was stepping onto a bus.

And it’s not just creativity in mathematics and physics. Comedian John Cleese, of Monty Python fame, makes much the same point about artistic creativity – it occurs not when you are focusing hard on your trade, but when you relax and let your unconscious mind wander.

Of course, not all the ideas that bubble up from your subconscious are going to be Eureka moments. Physicist Michael Berry talks about these subconscious ideas as if they are elementary particles called “claritons”:

Actually, I do have a contribution to particle physics … the elementary particle of sudden understanding: the “clariton”. Any scientist will recognise the “aha!” moment when this particle is created. But there is a problem: all too frequently, today’s clariton is annihilated by tomorrow’s “anticlariton”. So many of our scribblings disappear beneath a rubble of anticlaritons.

Here is something we can all relate to: that in the cold light of day, most of our “brilliant” subconscious ideas get annihilated by logical thinking. Only a very, very, very small number of claritons remain after this process. But the ones that do are likely to be gems.

In his renowned book Thinking, Fast and Slow, the Nobel prize-winning psychologist Daniel Kahneman describes the brain in a binary way. Most of the time when walking, chatting and looking around (in other words when multitasking), it operates in a mode Kahneman calls “system 1” – a rather fast, automatic, effortless mode of operation.

By contrast, when we are thinking hard about a specific problem (unitasking), the brain is in the slower, more deliberative and logical “system 2”. To perform a calculation like 37 × 13, we have to stop walking, stop talking, close our eyes and even put our hands over our ears. No chance for significant multitasking in system 2.

My 2015 paper with computational neuroscientist Michael O’Shea interpreted system 1 as a mode where available energy is spread across a large number of active neurons, and system 2 as where energy is focused on a smaller number of active neurons. The amount of energy per active neuron is therefore much smaller when in the system 1 mode, and it would seem plausible that the brain is more susceptible to noise when in this state. That is, in situations when we are multitasking, the operation of any one of the neurons will be most susceptible to the effects of noise in the brain.

Berry’s picture of clariton-anticlariton interaction seems to suggest a model of the brain where the noisy system 1 and the deterministic system 2 act in synergy. The anticlariton is the logical analysis that we perform in system 2 which, most of the time, leads us to reject our crazy system 1 ideas.

But sometimes one of these ideas turns out to be not so crazy.

This is reminiscent of how our simulated annealing analysis (Figure 1) works. Initially, we might find many “crazy” ideas appealing. But as we get closer to locating the optimal solution, the criteria for accepting a new suggestion become more stringent and discerning. Now, system 2 anticlaritons are annihilating almost everything the system 1 claritons can throw at them – but not quite everything, as Wiles found to his great relief.

The key to creativity

If the key to creativity is the synergy between noisy and deterministic thinking, what are some consequences of this?

On the one hand, if you do not have the necessary background information then your analytic powers will be depleted. That’s why Wiles says that leading up to the moment of insight, you have to immerse yourself in your subject. You aren’t going to have brilliant ideas which will revolutionise quantum physics unless you have a pretty good grasp of quantum physics in the first place.

But you also need to leave yourself enough time each day to do nothing much at all, to relax and let your mind wander. I tell my research students that if they want to be successful in their careers, they shouldn’t spend every waking hour in front of their laptop or desktop. And swapping it for social media probably doesn’t help either, since you still aren’t really multitasking – each moment you are on social media, your attention is still fixed on a specific issue.

But going for a walk or bike ride or painting a shed probably does help. Personally, I find that driving a car is a useful activity for coming up with new ideas and thoughts – provided you don’t turn the radio on.

When making difficult decisions, this suggests that, having listed all the pros and cons, it can be helpful not to actively think about the problem for a while. I think this explains how, years ago, I finally made the decision to change my research direction – not that I knew it at the time.

Because the brain’s system 1 is so energy efficient, we use it to make the vast majority of the many decisions in our daily lives (some say as many as 35,000) – most of which aren’t that important, like whether to continue putting one leg in front of the other as we walk down to the shops. (I could alternatively stop after each step, survey my surroundings to make sure a predator was not going to jump out and attack me, and on that basis decide whether to take the next step.)

However, this system 1 thinking can sometimes lead us to make bad decisions, because we have simply defaulted to this low-energy mode and not engaged system 2 when we should have. How many times do we say to ourselves in hindsight: “Why didn’t I give such and such a decision more thought?”

Of course, if instead we engaged system 2 for every decision we had to make, then we wouldn’t have enough time or energy to do all the other important things we have to do in our daily lives (so the shops may have shut by the time we reach them).

From this point of view, we should not treat wrong answers to unimportant questions as evidence of irrationality. Kahneman cites the fact that more than 50% of students at MIT, Harvard and Princeton gave the incorrect answer to this simple question – a bat and a ball cost $1.10 in total; the bat costs one dollar more than the ball; how much does the ball cost? – as evidence of our irrationality. The correct answer, if you think about it, is 5 cents. But system 1 screams out ten cents.
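Written out as an equation, with b the price of the ball in dollars (so the bat costs b + 1.00), the deliberate system 2 calculation is:

```latex
b + (b + 1.00) = 1.10 \quad\Rightarrow\quad 2b = 0.10 \quad\Rightarrow\quad b = 0.05
```

The intuitive answer of 10 cents fails this check: the bat would then cost $1.10 and the pair $1.20.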

If we were asked this question on pain of death, one would hope we would spend enough thought to come up with the correct answer. But if we were asked the question as part of an anonymous after-class test, when we had much more important things to spend time and energy doing, then I’d be inclined to think of it as irrational to give the right answer.

If we had 20MW to run the brain, we could spend part of it solving unimportant problems. But we only have 20W and we need to use it carefully. Perhaps it’s the 50% of MIT, Harvard and Princeton students who gave the wrong answer who are really the clever ones.

Just as a climate model with noise can produce types of weather that a model without noise can't, so a brain with noise can produce ideas that a brain without noise can't. And just as these types of weather can include exceptional hurricanes, so such an idea could end up winning you a Nobel Prize.

So, if you want to increase your chances of achieving something extraordinary, I’d recommend going for that walk in the countryside, looking up at the clouds, listening to the birds cheeping, and thinking about what you might eat for dinner.

So could computers be creative?

Will computers, one day, be as creative as Shakespeare, Bach or Einstein? Will they understand the world around us as we do? Stephen Hawking famously warned that AI could eventually take over and replace mankind.

However, the best-known advocate of the idea that computers will never understand as we do is Hawking's old colleague, Roger Penrose. In making his claim, Penrose invokes an important “meta” theorem in mathematics known as Gödel's theorem. Roughly, it says that for any consistent set of mathematical rules rich enough to describe arithmetic, there are true statements that can't be proven using those rules, and so can't be proven by a deterministic algorithm that simply applies them.

There is a simple way of illustrating Gödel's theorem. Suppose we make a list of all the most important mathematical theorems that have been proven since the time of the ancient Greeks. First on the list would be Euclid's proof that there are an infinite number of prime numbers, which requires one really creative step (multiply together all the primes in the supposedly finite list and add one). Mathematicians would call this a “trick” – shorthand for a clever and succinct mathematical construction.
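Euclid's trick can be tried out directly. The Python sketch below (the function names are hypothetical) multiplies a finite list of primes together, adds one, and finds the smallest prime factor of the result. Every listed prime leaves a remainder of 1 when divided into that number, so the factor must be a prime missing from the list. The result itself need not be prime: the second example gives 30031 = 59 × 509.

```python
def smallest_prime_factor(n):
    """Trial division: the smallest prime dividing n (for n >= 2)."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d  # the first divisor found is always prime
        d += 1
    return n  # no divisor up to sqrt(n), so n itself is prime


def euclid_new_prime(primes):
    """Euclid's trick: multiply the listed primes together and add one.

    Each listed prime leaves remainder 1, so the smallest prime factor
    of the result is a prime that was missing from the list.
    """
    product = 1
    for p in primes:
        product *= p
    candidate = product + 1
    q = smallest_prime_factor(candidate)
    assert q not in primes  # guaranteed by the remainder-1 argument
    return candidate, q


print(euclid_new_prime([2, 3, 5]))             # (31, 31): 31 is itself prime
print(euclid_new_prime([2, 3, 5, 7, 11, 13]))  # (30031, 59): 30031 = 59 x 509
```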

But is this trick useful for proving important theorems further down the list, like Pythagoras’s proof that the square root of two cannot be expressed as the ratio of two whole numbers? It’s clearly not; we need another trick for that theorem. Indeed, as you go down the list, you’ll find that a new trick is typically needed to prove each new theorem. It seems there is no end to the number of tricks that mathematicians will need to prove their theorems. Simply loading a given set of tricks on a computer won’t necessarily make the computer creative.
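The trick for the square root of two is of a quite different kind: an argument about even and odd numbers. Suppose, for contradiction, that √2 = p/q, where p and q are whole numbers with no common factor. Then:

```latex
\begin{aligned}
\sqrt{2} = \frac{p}{q} \;&\Rightarrow\; p^2 = 2q^2 \;\Rightarrow\; p \text{ is even, say } p = 2r \\
&\Rightarrow\; 4r^2 = 2q^2 \;\Rightarrow\; q^2 = 2r^2 \;\Rightarrow\; q \text{ is even}
\end{aligned}
```

So p and q are both even, contradicting the assumption that they share no common factor. The step from an even square to an even number uses the fact that the square of an odd number is odd.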

Does this mean mathematicians can breathe easily, knowing their jobs are not going to be taken over by computers? Well, maybe not.

I have been arguing that we need computers to be noisy rather than entirely deterministic, “bit-reproducible” machines. And noise, especially if it comes from quantum mechanical processes, would break the assumptions of Gödel’s theorem: a noisy computer is not an algorithmic machine in the usual sense of the word.

Does this imply that a noisy computer can be creative? Alan Turing, pioneer of the general-purpose computing machine, believed this was possible, suggesting that “if a machine is expected to be infallible then it cannot also be intelligent”. That is to say, if we want the machine to be intelligent then it had better be capable of making mistakes.

Others may argue there is no evidence that simply adding noise will make an otherwise stupid machine into an intelligent one – and I agree, as it stands. Adding noise to a climate model doesn’t automatically make it an intelligent climate model.

However, the type of synergistic interplay between noise and determinism – the kind that sorts the wheat from the chaff of random ideas – has hardly yet been developed in computer codes. Perhaps we could develop a new type of AI model where the AI is trained by getting it to prove simple mathematical theorems using the clariton-anticlariton model: by making guesses and seeing if any of these have value.

For this to be at all tractable, the AI system would need to be trained to focus on “educated random guesses”. (If the machine’s guesses are all uneducated ones, it will take forever to make progress – like waiting for a group of monkeys to type the first few lines of Hamlet.)

For example, in the context of Euclid’s proof that there are an unlimited number of primes, could we train an AI system in such a way that a random idea like “multiply the assumed finite number of primes together and add one” becomes much more likely than the completely useless random idea “add the assumed finite number of primes together and subtract six”? And if a particular guess turns out to be especially helpful, can we train the AI system so that the next guess is a refinement of the last one?
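A toy version of this guess-and-check loop can be sketched in Python. It is emphatically not an AI system, and everything in it is a hypothetical assumption for illustration. It has four parts:

- a handful of candidate “ideas” for building a new number from a finite list of primes;
- a random guesser whose weights stand in for how educated its guesses are;
- a check that plays the role of the anticlariton, rejecting any idea that fails on a few test lists;
- a reinforcement step that makes a surviving idea more likely to be guessed again.

```python
import math
import random

# Hypothetical "ideas" for building, from a finite list of primes, a number
# that none of the listed primes divides.
IDEAS = {
    "product + 1": lambda ps: math.prod(ps) + 1,
    "product - 1": lambda ps: math.prod(ps) - 1,
    "sum - 6": lambda ps: sum(ps) - 6,
    "sum + 1": lambda ps: sum(ps) + 1,
    "product": lambda ps: math.prod(ps),
}

TEST_LISTS = [[2, 3], [2, 3, 5], [2, 3, 5, 7], [2, 3, 5, 7, 11]]


def passes_check(idea):
    """The 'anticlariton': reject an idea unless, on every test list, it gives
    a number greater than 1 that none of the listed primes divides."""
    for ps in TEST_LISTS:
        n = IDEAS[idea](ps)
        if n <= 1 or any(n % p == 0 for p in ps):
            return False
    return True


def guess_and_check(weights, rounds=200, seed=0):
    """Draw ideas at random in proportion to `weights` (the 'educated' prior),
    keep those that survive the check, and reinforce them."""
    rng = random.Random(seed)
    weights = dict(weights)
    survivors = set()
    for _ in range(rounds):
        idea = rng.choices(list(weights), weights=list(weights.values()))[0]
        if passes_check(idea):      # a 'clariton' that survives
            survivors.add(idea)
            weights[idea] *= 1.5    # make this guess more likely next time
    return survivors, weights


educated = {"product + 1": 5.0, "product - 1": 5.0, "sum - 6": 1.0, "sum + 1": 1.0, "product": 1.0}
survivors, learned = guess_and_check(educated)
print(sorted(survivors))
```

With weights that favour product-based ideas, surviving guesses are likely to turn up within the first few rounds. The check also accepts “product - 1”, which works just as well as Euclid's “add one” for lists like these. A real system would need to generate and refine ideas rather than pick them from a fixed menu, and that is where the hard part lies.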

If we can somehow find a way to do this, it could open up modelling to a completely new level that is relevant to all fields of study. And in so doing, we might yet reach the so-called “singularity” when machines take over from humans. But only when AI developers fully embrace the constructive role of noise – as it seems the brain did many thousands of years ago.

For now, I feel the need for another walk in the countryside. To blow away some fusty old cobwebs – and perhaps sow the seeds for some exciting new ones.

Tim Palmer receives funding from The Royal Society and from the European Research Council. His book, The Primacy of Doubt is published by Oxford University Press.

Net Zero, The Digital Panopticon, & The Future Of Food

Authored by Colin Todhunter via Off-Guardian.org,

The food transition, the energy transition, net-zero ideology, programmable central bank digital currencies, the censorship of free speech and clampdowns on protest. What’s it all about? To understand these processes, we need to first locate what is essentially a social and economic reset within the context of a collapsing financial system.

Writer Ted Reece notes that the general rate of profit has trended downwards from an estimated 43% in the 1870s to 17% in the 2000s. By late 2019, many companies could not generate enough profit. Falling turnover, squeezed margins, limited cashflows and highly leveraged balance sheets were prevalent.

Professor Fabio Vighi of Cardiff University has described how closing down the global economy in early 2020 under the guise of fighting a supposedly new and novel pathogen allowed the US Federal Reserve to flood collapsing financial markets (COVID relief) with freshly printed money without causing hyperinflation. Lockdowns curtailed economic activity, thereby removing demand for the newly printed money (credit) in the physical economy and preventing ‘contagion’.

According to investigative journalist Michael Byrant, €1.5 trillion was needed to deal with the crisis in Europe alone. The financial collapse staring European central bankers in the face came to a head in 2019. The appearance of a ‘novel virus’ provided a convenient cover story.

The European Central Bank agreed to a €1.31 trillion bailout of banks followed by the EU agreeing to a €750 billion recovery fund for European states and corporations. This package of long-term, ultra-cheap credit to hundreds of banks was sold to the public as a necessary programme to cushion the impact of the pandemic on businesses and workers.

In response to a collapsing neoliberalism, we are now seeing the rollout of an authoritarian great reset — an agenda that intends to reshape the economy and change how we live.

SHIFT TO AUTHORITARIANISM

The new economy is to be dominated by a handful of tech giants, global conglomerates and e-commerce platforms, and new markets will also be created through the financialisation of nature, which is to be colonised, commodified and traded under the notion of protecting the environment.

In recent years, we have witnessed an overaccumulation of capital, and the creation of such markets will provide fresh investment opportunities (including dodgy carbon offsetting Ponzi schemes) for the super-rich to park their wealth and prosper.

This great reset envisages a transformation of Western societies, resulting in permanent restrictions on fundamental liberties and mass surveillance. It is being rolled out under the benign label of a ‘Fourth Industrial Revolution’, and the World Economic Forum (WEF) says the public will eventually ‘rent’ everything they require (remember the WEF video ‘you will own nothing and be happy’?): stripping the right of ownership under the guise of a ‘green economy’ and underpinned by the rhetoric of ‘sustainable consumption’ and ‘climate emergency’.

Climate alarmism and the mantra of sustainability are about promoting money-making schemes. But they also serve another purpose: social control.

Neoliberalism has run its course, resulting in the impoverishment of large sections of the population. But to dampen dissent and lower expectations, the levels of personal freedom we have been used to will not be tolerated. This means that the wider population will be subjected to the discipline of an emerging surveillance state.

To push back against any dissent, ordinary people are being told that they must sacrifice personal liberty in order to protect public health, societal security (those terrible Russians, Islamic extremists or that Sunak-designated bogeyman George Galloway) or the climate. Unlike in the old normal of neoliberalism, an ideological shift is occurring whereby personal freedoms are increasingly depicted as being dangerous because they run counter to the collective good.

The real reason for this ideological shift is to ensure that the masses get used to lower living standards and accept them. Consider, for instance, the Bank of England’s chief economist Huw Pill saying that people should ‘accept’ being poorer. And then there is Rob Kapito of the world’s biggest asset management firm BlackRock, who says that a “very entitled” generation must deal with scarcity for the first time in their lives.

At the same time, to muddy the waters, the message is that lower living standards are the result of the conflict in Ukraine and supply shocks that both the war and ‘the virus’ have caused.

The net-zero carbon emissions agenda will help legitimise lower living standards (reducing your carbon footprint) while reinforcing the notion that our rights must be sacrificed for the greater good. You will own nothing, not because the rich and their neoliberal agenda made you poor but because you will be instructed to stop being irresponsible and must act to protect the planet.

NET-ZERO AGENDA

But what of this shift towards net-zero greenhouse gas emissions and the plan to slash our carbon footprints? Is it even feasible or necessary?

Gordon Hughes, a former World Bank economist and current professor of economics at the University of Edinburgh, says in a new report that current UK and European net-zero policies will likely lead to further economic ruin.

Apparently, the only viable way to raise the cash for sufficient new capital expenditure (on wind and solar infrastructure) would be a two-decade-long reduction in private consumption of up to 10 per cent. Such a shock has never occurred in the last century outside war; even then, never for more than a decade.

But this agenda will also cause serious environmental degradation. So says Andrew Nikiforuk in the article The Rising Chorus of Renewable Energy Skeptics, which outlines how the green techno-dream is vastly destructive.

He lists the devastating environmental impacts of an even more mineral-intensive system based on renewables and warns:

“The whole process of replacing a declining system with a more complex mining-based enterprise is now supposed to take place with a fragile banking system, dysfunctional democracies, broken supply chains, critical mineral shortages and hostile geopolitics.”

All of this assumes that global warming is real and anthropogenic. Not everyone agrees. In the article Global warming and the confrontation between the West and the rest of the world, journalist Thierry Meyssan argues that net zero is based on political ideology rather than science. But to state such things has become heresy in Western countries, and anyone who does so is shouted down with accusations of ‘climate science denial’.

Regardless of such concerns, the march towards net zero continues, and key to this is the United Nations 2030 Agenda for Sustainable Development and its Sustainable Development Goals.

Today, almost every business or corporate report, website or brochure includes a multitude of references to ‘carbon footprints’, ‘sustainability’, ‘net zero’ or ‘climate neutrality’ and how a company or organisation intends to achieve its sustainability targets. Green profiling, green bonds and green investments go hand in hand with displaying ‘green’ credentials and ambitions wherever and whenever possible.

It seems anyone and everyone in business is planting their corporate flag on the summit of sustainability. Take Sainsbury’s, for instance. It is one of the UK’s ‘big six’ supermarket chains, and in 2019 it published a report setting out its vision for the future of food.

Here’s a quote from it:

“Personalised Optimisation is a trend that could see people chipped and connected like never before. A significant step on from wearable tech used today, the advent of personal microchips and neural laces has the potential to see all of our genetic, health and situational data recorded, stored and analysed by algorithms which could work out exactly what we need to support us at a particular time in our life. Retailers, such as Sainsbury’s could play a critical role to support this, arranging delivery of the needed food within thirty minutes — perhaps by drone.”

Tracked, traced and chipped — for your own benefit. Corporations accessing all of our personal data, right down to our DNA. The report is littered with references to sustainability and the climate or environment, and it is difficult not to get the impression that it is written so as to leave the reader awestruck by the technological possibilities.

However, the promotion of a brave new world of technological innovation that has nothing to say about power — who determines policies that have led to massive inequalities, poverty, malnutrition, food insecurity and hunger and who is responsible for the degradation of the environment in the first place — is nothing new.

The essence of power is conveniently glossed over, not least because those behind the prevailing food regime are also shaping the techno-utopian fairytale where everyone lives happily ever after eating bugs and synthetic food while living in a digital panopticon.

FAKE GREEN

The type of ‘green’ agenda being pushed is a multi-trillion-dollar market opportunity for lining the pockets of rich investors and subsidy-sucking green infrastructure firms, and also part of a strategy to secure the compliance required for the ‘new normal’.

It is, furthermore, a type of green that plans to cover much of the countryside with wind farms and solar panels with most farmers no longer farming. A recipe for food insecurity.

Those investing in the ‘green’ agenda care first and foremost about profit. The supremely influential BlackRock invests in the current food system that is responsible for polluted waterways, degraded soils, the displacement of smallholder farmers, a spiralling public health crisis, malnutrition and much more.

It also invests in healthcare — an industry that thrives on the illnesses and conditions created by eating the substandard food that the current system produces. Did Larry Fink, the top man at BlackRock, suddenly develop a conscience and become an environmentalist who cares about the planet and ordinary people? Of course not.

Any serious deliberations on the future of food would surely consider issues like food sovereignty, the role of agroecology and the strengthening of family farms — the backbone of current global food production.

The aforementioned article by Andrew Nikiforuk concludes that, if we are really serious about our impacts on the environment, we must scale back our needs and simplify society.

In terms of food, the solution rests on a low-input approach that strengthens rural communities and local markets and prioritises smallholder farms and small independent enterprises and retailers, localised democratic food systems and a concept of food sovereignty based on self-sufficiency, agroecological principles and regenerative agriculture.

It would involve facilitating the right to culturally appropriate food that is nutritionally dense due to diverse cropping patterns and free from toxic chemicals while ensuring local ownership and stewardship of common resources like land, water, soil and seeds.

That’s where genuine environmentalism and the future of food begins.


Five Aerospace Investments to Buy as Wars Worsen


Five aerospace investments to buy as wars worsen give investors a chance to acquire shares of companies focused on fortifying national defense.

The five aerospace investments to buy provide military products to help protect freedom amid Russia’s ongoing onslaught against Ukraine, which began in February 2022, and supply arms used in the Middle East since Hamas militants attacked and murdered civilians in Israel on Oct. 7. Even though the S&P 500 recently reached all-time highs, these five aerospace investments have remained reasonably priced and are recommended by seasoned analysts and a pension fund chairman.

State television broadcasts in Russia show the country’s soldiers advancing further into Ukrainian territory, but family members of soldiers serving in perilous conditions in the invasion of the neighboring nation have protested to demand that they be brought home. Even though hundreds of thousands of Russians have fled to other countries to avoid compulsory military service, Russian President Vladimir Putin has vowed to keep sending additional soldiers into the fierce fighting.

While Russia’s land grab of Crimea and other parts of Ukraine shows no sign of ending, Israel’s war with Hamas is likely to last at least several more months, according to the latest reports. United Nations leaders expressed alarm on Dec. 26 about intensifying Israeli attacks that killed more than 100 Palestinians over two days in part of the Gaza Strip, a period in which 15 members of the Israel Defense Forces (IDF) also lost their lives.

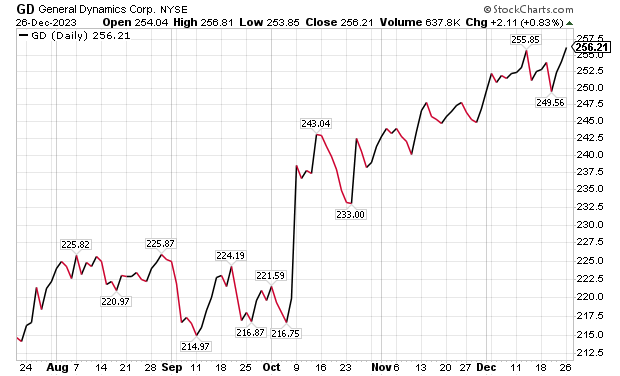

Five Aerospace Investments to Buy as Wars Worsen: General Dynamics

One of the five aerospace investments to buy as wars worsen is General Dynamics (NYSE: GD), a Reston, Virginia-based aerospace company with more than 100,000 employees in 70-plus countries. A key business unit of General Dynamics is Gulfstream Aerospace Corporation, a manufacturer of business aircraft. Other segments of General Dynamics focus on making military products such as Abrams tanks, Stryker fighting vehicles, ASCOD fighting vehicles like the Spanish PIZARRO and British AJAX, LAV-25 Light Armored Vehicles and Flyer-60 lightweight tactical vehicles.

For the U.S. Navy and other allied armed forces, General Dynamics builds Virginia-class attack submarines, Columbia-class ballistic missile submarines, Arleigh Burke-class guided missile destroyers, Expeditionary Sea Base ships, fleet logistics ships, commercial cargo ships, aircraft and naval gun systems, Hydra-70 rockets, military radios and command and control systems. In addition, the company provides radio and optical telescopes, secure mobile phones, PIRANHA and PANDUR wheeled armored vehicles and mobile bridge systems.

Chicago-based investment firm William Blair & Co. is among those recommending General Dynamics. The firm gave an “outperform” rating to General Dynamics in a Dec. 21 research note.

Gulfstream is seeking FAA certification of the G700 by the end of 2023, suggesting potentially positive news in the next 10 days, William Blair wrote in its recent research note. The investment firm projected that General Dynamics shares would trade higher upon the G700’s certification.

“General Dynamics’ 2023 aircraft delivery guidance of approximately 134 planes assumes that 19 G700s are delivered in the fourth quarter,” wrote William Blair’s aerospace and defense analyst Louie DiPalma. “Even if deliveries fall short of this target, we believe investors will take a glass-half-full approach upon receipt of the certification.”

Chart courtesy of www.stockcharts.com.

Five Aerospace Investments to Buy as Wars Worsen: GD Outlook

The G700 is a major focus area for investors because it is Gulfstream’s most significant aircraft introduction since the iconic G650 in 2012, DiPalma wrote. Gulfstream has the highest market share in the long-range jet segment of the private aircraft market, the highest profit margin of aircraft peers and the most premium business aviation brand, he added.

“The aircraft remains immensely popular today with corporations and high-net-worth individuals,” DiPalma wrote. “Elon Musk has reportedly placed an order for a G700 to go along with his existing G650. Qatar Airways announced at the Paris Air Show that 10 G700 aircraft will become part of its fleet.”

G700 deliveries and subsequent G800 deliveries are expected to be the cornerstone of Gulfstream’s growth and margin expansion for the next decade, DiPalma wrote. This should lead to a rebound in the stock price as the margins for the G700 and G800 are very attractive, he added.

Management’s guidance is for the aerospace operating margin to increase from about 13.2% in 2022 to roughly 14.0% in 2023 and 15.8% in 2024. Longer term, a high-teens profit margin appears within reach, DiPalma projected.

In other General Dynamics business segments, William Blair expects several yet-unannounced large contract awards for General Dynamics IT, to go along with C$1.7 billion, or US$1.29 billion, in General Dynamics Mission Systems contracts announced on Dec. 20 for the Canadian Army. General Dynamics shares are poised to have a strong 2024, William Blair wrote.

Five Aerospace Investments to Buy as Wars Worsen: VSE Corporation

Alexandria, Virginia-based VSE Corporation’s (NASDAQ: VSEC) price-to-earnings (P/E) valuation multiple of 22 received support when AAR Corp. (NYSE: AIR), a Wood Dale, Illinois, provider of aviation services, announced on Dec. 21 that it would acquire the product support business of Triumph Group (NYSE: TGI), a Berwyn, Pennsylvania, supplier of aerospace services, structures and systems. AAR’s purchase price of $725 million reflects confidence in a continued post-pandemic aerospace rebound.

VSE, a provider of aftermarket distribution and repair services for land, sea and air transportation assets used by government and commercial markets, is rated “outperform” by William Blair. The company’s core services include maintenance, repair and operations (MRO), parts distribution, supply chain management and logistics, engineering support, as well as consulting and training for global commercial, federal, military and defense customers.

“Robust consumer travel demand and aging aircraft fleets have driven elevated maintenance visits,” William Blair’s DiPalma wrote in a Dec. 21 research note. “The AAR–Triumph deal is valued at a premium 13-times 2024 EBITDA multiple, which was in line with the valuation multiple that Heico (NYSE: HEI) paid for Wencor over the summer.”

VSE currently trades at a discounted 9.5 times consensus 2024 earnings before interest, taxes, depreciation and amortization (EBITDA) estimates, as well as 11.6 times consensus 2023 EBITDA.

Five Aerospace Investments to Buy as Wars Worsen: VSE Undervalued?

“We expect that VSE shares will trend higher as investors process this deal,” DiPalma wrote. “VSE shares trade at 9.5 times consensus 2024 adjusted EBITDA, compared with peers and M&A comps in the 10-to-14-times range. We think that VSE’s multiple will expand as it closes the divestiture of its federal and defense business and makes strategic acquisitions. We see consistent 15% annual upside for shares as VSE continues to take share in the $110 billion aviation aftermarket industry.”

William Blair reaffirmed its “outperform” rating for VSE on Dec. 21. The main risk to VSE shares is lumpiness associated with its aviation services margins, DiPalma wrote. However, he raised 2024 estimates to further reflect commentary from VSE’s analyst day in November.

Chart courtesy of www.stockcharts.com.

Five Aerospace Investments to Buy as Wars Worsen: HEICO Corporation

HEICO Corporation (NYSE: HEI) is a Hollywood, Florida-based technology-driven aerospace, industrial, defense and electronics company that also is rated “outperform” by William Blair’s DiPalma. The aerospace aftermarket parts provider recently reported fourth-quarter results above consensus analyst estimates, driven by 20% organic growth in HEICO’s Flight Support Group.

HEICO’s management indicated that the performance of recently acquired Wencor is exceeding expectations. However, HEICO’s leaders offered guidance on 2024 organic growth and margins that pointed to smaller gains. Even though consensus estimates already assumed slowing growth, the guidance is still not a positive for HEICO, DiPalma wrote.

William Blair forecasts 15% annual upside to HEICO’s shares, based on EBITDA growth. HEICO’s management cited a host of reasons for its quarterly outperformance, highlighted by the continued commercial air travel recovery. The company also referenced new product introductions and efficiency initiatives.

HEICO’s defense product sales increased by 26% sequentially, marking the third consecutive sequential increase in defense product revenue. The company’s leaders conveyed that defense in general is moving in the right direction to enhance financial performance.

Chart courtesy of www.stockcharts.com.

Five Aerospace Investments to Buy as Wars Worsen: XAR

A fourth way to obtain exposure to defense and aerospace investments is through SPDR S&P Aerospace and Defense ETF (XAR). That exchange-traded fund tracks the S&P Aerospace & Defense Select Industry Index. The fund is overweight in industrials and underweight in technology and consumer cyclicals, said Bob Carlson, a pension fund chairman who heads the Retirement Watch investment newsletter.

Bob Carlson, who heads Retirement Watch, answers questions from Paul Dykewicz.

XAR has 34 securities, and 44.2% of the fund is in the 10 largest positions. The fund is up 25.82% in the last 12 months, 22.03% in the past three months and 7.92% for the last month. Its dividend yield recently measured 0.38%.

The largest positions in the fund recently were Axon Enterprise (NASDAQ: AXON), Boeing (NYSE: BA), L3Harris Technologies (NYSE: LHX), Spirit Aerosystems (NYSE: SPR) and Virgin Galactic (NYSE: SPCE).

Chart courtesy of www.stockcharts.com

Five Aerospace Investments to Buy as Wars Worsen: PPA

The second fund recommended by Carlson is Invesco Aerospace & Defense ETF (PPA), which tracks the SPADE Defense Index. It has the same underweighting and overweighting as XAR, he said.

PPA recently held 52 securities, and 53.2% of the fund was in its 10 largest positions. With so many holdings, the fund offers much lower risk than buying individual stocks. The largest positions in the fund recently were Boeing (NYSE: BA), RTX Corp. (NYSE: RTX), Lockheed Martin (NYSE: LMT), Northrop Grumman (NYSE: NOC) and General Electric (NYSE: GE).

The fund is up 19.07% for the past year, 50.34% in the last three months and 5.30% during the past month. The dividend yield recently touched 0.69%.

Chart courtesy of www.stockcharts.com

Other Fans of Aerospace

Two fans of aerospace stocks are Mark Skousen, PhD, and seasoned stock picker Jim Woods. The pair team up to head the Fast Money Alert advisory service. Their recent recommendation of Lockheed Martin (NYSE: LMT) in Fast Money Alert is already profitable.

Mark Skousen, a scion of Ben Franklin, meets with Paul Dykewicz.

Jim Woods, a former U.S. Army paratrooper, co-heads Fast Money Alert.

Bryan Perry, who heads the Cash Machine investment newsletter and the Micro-Cap Stock Trader advisory service, recommends satellite services provider Globalstar (NYSE American: GSAT), of Covington, Louisiana, which has jumped 50.00% since he advised buying it two months ago. Perry is averaging a dividend yield of 11.14% in his Cash Machine newsletter but is breaking out with his red-hot Globalstar recommendation in his Micro-Cap Stock Trader advisory service.

Bryan Perry heads Cash Machine, averaging an 11.14% dividend yield.

Military Equipment Demand Soars amid Multiple Wars

The U.S. military faces an acute need to adopt innovation, to expedite implementation of technological gains, to tap into the talents of people in various industries and to step up collaboration with private industry and international partners to enhance effectiveness, Chairman of the Joint Chiefs of Staff Gen. Charles Q. Brown Jr. told attendees on Nov. 16 at a national security conference. Multiple raging wars, including those in the Middle East and Ukraine, are prime examples of that need. A cold war also involves China and its increasingly strained relationships with Taiwan and other Asian nations.

The shocking Oct. 7 attack by Hamas on Israel touched off an ongoing war in the Middle East, which followed Russia’s February 2022 invasion and continuing assault on neighboring Ukraine. Those brutal military conflicts show the fragility of peace when determined aggressors are willing to use any means necessary to achieve their goals. Fending off such attacks requires a rapid and effective response.

“The Department of Defense is doing more than ever before to deter, defend, and, if necessary, defeat aggression,” Gen. Brown said at the National Security Innovation Forum at the Johns Hopkins University Bloomberg Center in Washington, D.C.

One of Russia’s warships, the 360-foot-long Novocherkassk, was damaged on Dec. 26 by a Ukrainian attack on the Black Sea port of Feodosia in Crimea. A video of an explosion at the port reportedly shows a section of the ship being hit by aircraft-guided missiles.

Chairman of the Joint Chiefs of Staff Gen. Charles Q. Brown, Jr.

Photo By: Benjamin Applebaum

Gen. Brown said he quickly learned after taking his post on Oct. 1 that national security threats can compel immediate action.

“We may not have much warning when the next fight begins,” Gen. Brown said. “We need to be ready.”

In a pre-recorded speech at the conference, Michael R. Bloomberg, founder of Bloomberg LP, told attendees about the critical need for collaboration between government and industry.

“Building enduring technological advances for the U.S. military will help our service members and allies defend freedom across the globe,” Bloomberg said.

The “horrific terrorist attacks” against Israel and civilians living there on Oct. 7 underscore the importance of that mission, Bloomberg added.

Paul Dykewicz, www.pauldykewicz.com, is an accomplished, award-winning journalist who has written for Dow Jones, the Wall Street Journal, Investor’s Business Daily, USA Today, the Journal of Commerce, Seeking Alpha, Guru Focus and other publications and websites. Attention Holiday Gift Buyers! Consider purchasing Paul’s inspirational book, “Holy Smokes! Golden Guidance from Notre Dame’s Championship Chaplain,” with a foreword by former national championship-winning football coach Lou Holtz. The uplifting book is a great gift and is endorsed by Joe Montana, Joe Theismann, Ara Parseghian, “Rocket” Ismail, Reggie Brooks, Dick Vitale and many others. Call 202-677-4457 for special pricing on multiple-book purchases or autographed copies! Follow Paul on Twitter @PaulDykewicz. He is the editor of StockInvestor.com and DividendInvestor.com, a writer for both websites and a columnist. He also is editorial director of Eagle Financial Publications in Washington, D.C., where he edits monthly investment newsletters, time-sensitive trading alerts, free e-letters and other investment reports. Paul previously served as business editor of Baltimore’s Daily Record newspaper, after writing for the Baltimore Business Journal and Crain Communications.

The post Five Aerospace Investments to Buy as Wars Worsen appeared first on Stock Investor.


Health Officials: Man Dies From Bubonic Plague In New Mexico


Authored by Jack Phillips via The Epoch Times (emphasis ours),


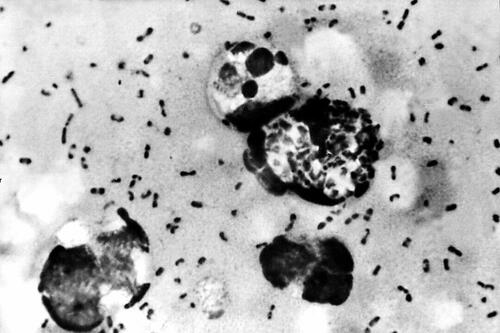

Officials in New Mexico confirmed that a resident died from the plague in the United States’ first fatal case in several years.

The New Mexico Department of Health, in a statement, said that a man in Lincoln County “succumbed to the plague.” The man, who was not identified, was hospitalized before his death, officials said.

According to the statement, it is the first human case of plague in New Mexico since 2021 and the first death since 2020. No other details were provided, including how the disease spread to the man.

The agency is now doing outreach in Lincoln County, while “an environmental assessment will also be conducted in the community to look for ongoing risk,” the statement continued.

“This tragic incident serves as a clear reminder of the threat posed by this ancient disease and emphasizes the need for heightened community awareness and proactive measures to prevent its spread,” the agency said.

Plague, known historically as the Black Death and most commonly occurring as bubonic plague, is a bacterial disease carried by rodents and generally spread to people through the bites of infected fleas. It can also spread through contact with infected animals such as rodents, pets, or wildlife.

The New Mexico Department of Health statement said that pets such as dogs and cats that roam and hunt can bring infected fleas back into homes and put residents at risk.

Officials warned people in the area to “avoid sick or dead rodents and rabbits, and their nests and burrows” and to “prevent pets from roaming and hunting.”

“Talk to your veterinarian about using an appropriate flea control product on your pets as not all products are safe for cats, dogs or your children” and “have sick pets examined promptly by a veterinarian,” it added.

“See your doctor about any unexplained illness involving a sudden and severe fever,” the statement continued, adding that locals should clean up areas around their homes that could house rodents, such as wood piles, junk piles, old vehicles, and brush piles.

The plague, which is caused by the bacterium Yersinia pestis, famously killed an estimated tens of millions of Europeans in the 14th and 15th centuries following the Mongol invasions. In that pandemic, the bacteria spread via fleas on black rats, a mechanism that historians say was unknown to people at the time.

Other outbreaks were also devastating: the Plague of Justinian in the 6th century is believed to have killed about one-fifth of the population of the Byzantine Empire, according to historical records and accounts. In 2013, researchers said the Justinian plague was also caused by Yersinia pestis.

In the United States, however, plague is considered rare, and worldwide it usually occurs in only a handful of countries. According to the Mayo Clinic, the disease generally affects only a few people in rural areas of Western states.

Recent cases have occurred mainly in Africa, Asia, and Latin America. Countries with frequent plague cases include Madagascar, the Democratic Republic of Congo, and Peru, the clinic says. There were multiple cases of plague reported in Inner Mongolia, China, in recent years, too.

Symptoms

Symptoms of a bubonic plague infection include headache, chills, fever, and weakness. Health officials say it usually causes painful swelling of the lymph nodes in the groin, armpit, or neck. The swelling typically develops within about two to eight days.

The disease can generally be treated with antibiotics but is usually deadly if left untreated, the Mayo Clinic website says.

“Plague is considered a potential bioweapon. The U.S. government has plans and treatments in place if the disease is used as a weapon,” the website also says.

According to data from the U.S. Centers for Disease Control and Prevention, the last time that plague deaths were reported in the United States was in 2020 when two people died.
